-

PDF

- Split View

-

Views

-

Cite

Cite

Andrea Farruggia, Travis Gagie, Gonzalo Navarro, Simon J Puglisi, Jouni Sirén, Relative Suffix Trees, The Computer Journal, Volume 61, Issue 5, May 2018, Pages 773–788, https://doi.org/10.1093/comjnl/bxx108

Close - Share Icon Share

Abstract

Suffix trees are one of the most versatile data structures in stringology, with many applications in bioinformatics. Their main drawback is their size, which can be tens of times larger than the input sequence. Much effort has been put into reducing the space usage, leading ultimately to compressed suffix trees. These compressed data structures can efficiently simulate the suffix tree, while using space proportional to a compressed representation of the sequence. In this work, we take a new approach to compressed suffix trees for repetitive sequence collections, such as collections of individual genomes. We compress the suffix trees of individual sequences relative to the suffix tree of a reference sequence. These relative data structures provide competitive time/space trade-offs, being almost as small as the smallest compressed suffix trees for repetitive collections, and competitive in time with the largest and fastest compressed suffix trees.

1. INTRODUCTION

The suffix tree [1] is one of the most powerful bioinformatic tools to answer complex queries on DNA and protein sequences [2–4]. A serious problem that hampers its wider use on large genome sequences is its size, which may be 10–20 bytes per character. In addition, the non-local access patterns required by most interesting problems solved with suffix trees complicate secondary-memory deployments. This problem has led to numerous efforts to reduce the size of suffix trees by representing them using compressed data structures [5–17], leading to compressed suffix trees (CST). Currently, the smallest CST is the so-called fully compressed suffix tree (FCST) [10, 14], which uses 5 bits per character (bpc) for DNA sequences, but takes milliseconds to simulate suffix tree navigation operations. In the other extreme, Sadakane’s CST [5, 11] uses about 12 bpc and operates in microseconds, and even nanoseconds for the simplest operations.

A space usage of 12 bpc may seem reasonable to handle, for example, one human genome, which has about 3.1 billion bases: it can be operated within a RAM of 4.5 GB (the representation contains the sequence as well). However, as the price of sequencing has fallen, sequencing the genomes of a large number of individuals has become a routine activity. The 1000 Genomes Project [18] sequenced the genomes of several thousand humans, while newer projects can be orders of magnitude larger. This has made the development of techniques for storing and analyzing huge amounts of sequence data flourish.

Just storing 1000 human genomes using a 12 bpc CST requires almost 4.5 TB, which is much more than the amount of memory available in a commodity server. Assuming that a single server has 256 GB of memory, we would need a cluster of 18 servers to handle such a collection of CSTs (compared with over 100 with classical suffix tree implementations!). With the smaller (and much slower) FCST, this would drop to 7–8 servers. It is clear that further space reductions in the representation of CST would lead to reductions in hardware, communication and energy costs when implementing complex searches over large genomic databases.

An important characteristic of those large genome databases is that they usually consist of the genomes of individuals of the same or closely related species. This implies that the collections are highly repetitive, that is, each genome can be obtained by concatenating a relatively small number of substrings of other genomes and adding a few new characters. When repetitiveness is considered, much higher compression rates can be obtained in CST. For example, it is possible to reduce the space to 1–2 bpc (albeit with operation times in the milliseconds) [13], or to 2–3 bpc with operation times in the microseconds [15]. Using 2 bpc, our 1000 genomes could be handled with just three servers with 256 GB of memory.

Compression algorithms best capture repetitiveness by using grammar-based compression or Lempel–Ziv compression.1 In the first case [19, 20], one finds a context-free grammar that generates (only) the text collection. Rather than compressing the text directly, the current CSTs for repetitive collections [13, 15] apply grammar-based compression on the data structures that simulate the suffix tree. Grammar-based compression yields relatively easy direct access to the compressed sequence [21], which makes it attractive compared to Lempel–Ziv compression [22], despite the latter generally using less space.

Lempel–Ziv compression cuts the collection into phrases, each of which has already appeared earlier in the collection. To extract the content of a phrase, one may have to recursively extract the content at that earlier position, following a possibly long chain of indirections. So far, the indexes built on Lempel–Ziv compression [23] or on combinations of Lempel–Ziv and grammar-based compression [24–26] support only pattern matching, which is just one of the wide range of functionalities offered by suffix trees. The high cost to access the data at random positions lies at the heart of the research on indexes built on Lempel–Ziv compression.

A simple way out of this limitation is the so-called relative Lempel–Ziv (RLZ) compression [27], where one of the sequences is represented in plain form and the others can only take phrases from that reference sequence. This enables immediate access for the symbols inside any copied phrase (as no transitive referencing exists) and, at least, if a good reference sequence has been found, offers compression competitive with the classical Lempel–Ziv. In our case, taking any random genome per species as the reference is good enough; more sophisticated techniques have been studied [28–30]. Structures for direct access [31, 32] and even for pattern matching [33] have been developed on top of RLZ.

Another approach to compressing a repetitive collection while supporting interesting queries is to build an automaton that accepts the sequences in the collection, and then index the state diagram as an directed acyclic graph (DAG); see, for example, [34–36] for recent discussions. The first data structure to take this approach was the generalized compressed suffix array (GCSA) [37, 36], which was designed for pangenomics so queries can return information about sequences not in the collection but that can be obtained from those in the collection by recombination.

The FM-index of an alignment (FMA) [38, 39] is similar to the GCSA but indexes only the sequences in the collection: whereas the GCSA conceptually embeds the automaton in a de Bruijn graph, the FMA embeds it in a colored de Bruijn graph [40], preserving its specificity. Both the GCSA and the FMA are practical but neither support the full functionality of a suffix tree. The precursor to the FMA, the suffix tree of an alignment (STA) [41, 42], allows certain disjunctions in the suffix tree’s edge labels in order to reduce the size of the tree while maintaining its functionality. Unlike the FMA, however, the STA has not been implemented. Both the STA and the FMA divide the sequences in the collection into regions of variation and conserved regions, and depend on the conserved regions being long enough that they can be distinguished from each other and the variations. This dependency makes these structures vulnerable to even a small change in even one sequence to an otherwise-conserved region, which could hamper their scalability.

1.1. One general or many individual s

It is important to note that the existing techniques to reduce the space of a collection of suffix trees on similar texts build a structure that indexes the collection as a whole, which is similar to concatenating all the texts of the collection and building a single suffix tree on the concatenation. As such, these structures do not provide the same functionality of having an individual CST of each sequence.

Exploiting the repetitiveness of a collection while retaining separate index structures for each text has only been achieved for a simpler pattern-matching index, the suffix array (SA) [43], by means of the so-called relative FM-indexes (FMIs) [44]. The SA is a component of the suffix tree.

Depending on the application, we may actually need a single for the whole collection, or one for each sequence. In bioinformatics, a single is more appropriate for search and discovery of motifs across a whole population, for example, by looking for approximate occurrences of a certain sequence in the genomes of the population or by discovering significant sequences that appear in many individuals. Other bioinformatic problems, for example related to the study of diseases, inheritance patterns or forensics, boil down to searching or discovering patterns in the genomes of individuals, by finding common approximate subsequences between two genomes, or looking for specific motifs or discovering certain patterns in a single genome.

An example of recent research making use of the relative storage of individual genomic datasets is how Muggli et al. [45] (see also [46, 47]) adapted relative FMIs to an FMI variant that Bowe et al. [48] had described for de Bruijn graphs, thus obtaining a space-efficient implementation of Iqbal et al.’s [49] colored de Bruijn graphs. These overlay de Bruijn graphs for many individuals to represent genetic variation in a population.

1.2. Our contribution

In this paper, we develop a CST for repetitive collections by augmenting the relative FMI with structures based on RLZ. This turns out to be the first CST representation that takes advantage of the repetitiveness of the texts in a collection while at the same time offering an individual CST for each such text. Besides retaining the original functionality, such an approach greatly simplifies inserting and deleting texts in the collection and implementing the index in distributed form.

Our compressed suffix tree, called relative suffix tree (RST), follows a trend of CSTs [6–9, 11, 13] that use only a SA and an array with the length of the longest common prefix (LCP) between each suffix and the previous one in lexicographic order (called LCP). We use the relative FMI as our SA, and compress LCP using RLZ. On top of the RLZ phrases we build a tree of range minima that enables fast range minimum queries, as well as next- and previous-smaller-value queries, on LCP [13]. All the CST functionality is built on those queries [6]. Our main algorithmic contribution is this RLZ-based representation of the LCP array with the required extra functionality.

On a collection of human genomes, our RST achieves less than 3 bpc and operates within microseconds. This performance is comparable to that of a previous CST [15] (as explained, however, the RST provides a different functionality because it retains the individual CSTs).

2. BACKGROUND

A string is a sequence of characters over an alphabet. For indexing purposes, we often consider text strings that are terminated by an endmarker not occurring elsewhere in the text. Binary sequences are sequences over the alphabet . If is a binary sequence, its complement is binary sequence , with .

For any binary sequence , we define the subsequence of string as the concatenation of the characters with . The complement of subsequence is the subsequence . Contiguous subsequences are called substrings. Substrings of the form and , , are called prefixes and suffixes, respectively. We define the lexicographic order among strings in the usual way.

2.1. Full-text indexes

The suffix tree (ST) [1] of text is a tree containing the suffixes of , with unary paths compacted into single edges. Because the degree of every internal node is at least two, there can be at most nodes, and the suffix tree can be stored in bits. In practice, this is at least bytes for small texts [50], and more for large texts as the pointers grow larger. If is a node of a suffix tree, we write to denote the concatenation of the labels of the path from the root to .

SAs [43] were introduced as a space-efficient alternative to suffix trees. The SA of text is an array of pointers to the suffixes of the text in lexicographic order.2 In its basic form, the SA requires bits in addition to the text, but its functionality is more limited than that of the suffix tree. In addition to the SA, many algorithms also use the inverse SA, with for all .

Let be the length of the (LCP) of strings and . The LCParray [43] of text stores the LCP lengths for lexicographically adjacent suffixes of as (with ). Let be an internal node of the suffix tree, the string depth of node , and the corresponding SA interval. The following properties hold for the lcp-interval: (i) ; (ii) for all ; (iii) for at least one ; and (iv) [51].

Abouelhoda, Kurtz and Ohlebusch [51] showed how traversals on the suffix tree could be simulated using the SA, the LCP array, and a representation of the suffix tree topology based on lcp-intervals, paving the way for more space-efficient suffix tree representations.

2.2. Compressed text indexes

Data structures supporting rank and select queries over sequences are the main building blocks of compressed text indexes. If is a sequence, we define as the number of occurrences of character in the prefix , while is the position of the occurrence of rank in sequence . A bitvector is a representation of a binary sequence supporting fast rank and select queries. Wavelet trees (WT) [52] use bitvectors to support rank and select on general sequences.

The Burrows–Wheeler transform (BWT) [53] is a reversible permutation of text . It is defined as (with if ). Originally intended for data compression, the BWT has been widely used in space-efficient text indexes, because it shares the combinatorial structure of the suffix tree and the SA.

Let LF be a function such that (with if ). We can compute it as , where is the number of occurrences of characters with lexicographical values smaller than in BWT. The inverse function of LF is , with , where is the largest character value with . With functions and LF, we can move forward and backward in the text, while maintaining the lexicographic rank of the current suffix. If the sequence is not evident from the context, we write and .

Compressed SAs (CSA) [54–56] are text indexes supporting a functionality similar to the SA. This includes the following queries: (i) determines the lexicographic range of suffixes starting with pattern; (ii) returns the starting positions of these suffixes; and (iii) extracts substrings of the text. In practice, the find performance of CSAs can be competitive with SAs, while locate queries are orders of magnitude slower [57]. Typical index sizes are less than the size of the uncompressed text.

We support locate queries by sampling some SA pointers. If we want to determine a value that has not been sampled, we can compute it as , where is a sampled pointer found by iterating LF times, starting from position . Given sample interval, the samples can be chosen in suffix order, sampling at positions divisible by , or in text order, sampling at positions divisible by and marking the sampled SA positions in a bitvector. Suffix-order sampling requires less space, often resulting in better time/space trade-offs in practice, while text-order sampling guarantees better worst-case performance. We also sample the ISA pointers for extract queries. To extract , we find the nearest sampled pointer after , and traverse backwards to with function LF.

CST [5] are compressed text indexes supporting the full functionality of a suffix tree (see Table 1). They combine a CSA, a compressed representation of the LCP array, and a compressed representation of suffix tree topology. For the LCP array, there are several common representations:

LCP-byte [51] stores the LCP array as a byte array. If , the LCP value is stored in the byte array. Larger values are marked with a 255 in the byte array and stored separately. As many texts produce small LCP values, LCP-byte usually requires to bytes of space.

We can store the LCP array by using variable-length codes. LCP-dac uses directly addressable codes [59] for the purpose, resulting in a structure that is typically somewhat smaller and somewhat slower than LCP-byte.

The permutedLCP (PLCP) array [5] is the LCP array stored in text order and used as . Because , the array can be stored as a bitvector of length in bits. If the text is repetitive, run-length encoding can be used to compress the bitvector to take even less space [6]. Because accessing PLCP uses locate, it is much slower than the above two encodings.

Typical compressed suffix tree operations.

| Operation . | Description . |

|---|---|

| The root of the tree | |

| Is node a leaf? | |

| Is node an ancestor of node ? | |

| Number of leaves in the subtree with as the root | |

| Pointer to the suffix corresponding to leaf | |

| The parent of node | |

| The first child of node in alphabetic order | |

| The next sibling of node in alphabetic order | |

| The lowest common ancestor of nodes and | |

| String depth: length of the label from the root to node | |

| Tree depth: the depth of node in the suffix tree | |

| The highest ancestor of node with string depth at least | |

| The ancestor of node with tree depth | |

| Suffix link: Node such that for a character | |

| Suffix link iterated times | |

| The child of node with edge label starting with character | |

| The character |

| Operation . | Description . |

|---|---|

| The root of the tree | |

| Is node a leaf? | |

| Is node an ancestor of node ? | |

| Number of leaves in the subtree with as the root | |

| Pointer to the suffix corresponding to leaf | |

| The parent of node | |

| The first child of node in alphabetic order | |

| The next sibling of node in alphabetic order | |

| The lowest common ancestor of nodes and | |

| String depth: length of the label from the root to node | |

| Tree depth: the depth of node in the suffix tree | |

| The highest ancestor of node with string depth at least | |

| The ancestor of node with tree depth | |

| Suffix link: Node such that for a character | |

| Suffix link iterated times | |

| The child of node with edge label starting with character | |

| The character |

Typical compressed suffix tree operations.

| Operation . | Description . |

|---|---|

| The root of the tree | |

| Is node a leaf? | |

| Is node an ancestor of node ? | |

| Number of leaves in the subtree with as the root | |

| Pointer to the suffix corresponding to leaf | |

| The parent of node | |

| The first child of node in alphabetic order | |

| The next sibling of node in alphabetic order | |

| The lowest common ancestor of nodes and | |

| String depth: length of the label from the root to node | |

| Tree depth: the depth of node in the suffix tree | |

| The highest ancestor of node with string depth at least | |

| The ancestor of node with tree depth | |

| Suffix link: Node such that for a character | |

| Suffix link iterated times | |

| The child of node with edge label starting with character | |

| The character |

| Operation . | Description . |

|---|---|

| The root of the tree | |

| Is node a leaf? | |

| Is node an ancestor of node ? | |

| Number of leaves in the subtree with as the root | |

| Pointer to the suffix corresponding to leaf | |

| The parent of node | |

| The first child of node in alphabetic order | |

| The next sibling of node in alphabetic order | |

| The lowest common ancestor of nodes and | |

| String depth: length of the label from the root to node | |

| Tree depth: the depth of node in the suffix tree | |

| The highest ancestor of node with string depth at least | |

| The ancestor of node with tree depth | |

| Suffix link: Node such that for a character | |

| Suffix link iterated times | |

| The child of node with edge label starting with character | |

| The character |

Suffix tree topology representations are the main difference between the various CST proposals. While the CSAs and the LCP arrays are interchangeable, the tree representation determines how various suffix tree operations are implemented. There are three main families of CST:

Sadakane’s compressed suffix tree (CST-Sada) [5] uses a balanced parentheses representation for the tree. Each node is encoded as an opening parenthesis, followed by the encodings of its children and a closing parenthesis. This can be encoded as a bitvector of length , where is the number of nodes, requiring up to bits. CST-Sada tends to be larger and faster than the other compressed suffix trees [11, 13].

The fully compressed suffix tree (FCST) of Russo et al. [10, 14] aims to use as little space as possible. It does not require an LCP array at all, and stores a balanced parentheses representation for a sampled subset of suffix tree nodes in bits. Unsampled nodes are retrieved by following suffix links. FCST is smaller and much slower than the other CST [10, 13].

Fischer, Mäkinen and Navarro [6] proposed an intermediate representation, CST-NPR, based on lcp-intervals. Tree navigation is handled by searching for the values defining the lcp-intervals. Range minimum queries find the leftmost minimal value in , while next/previous smaller value queries / find the next/previous LCP value smaller than . After the improvements by various authors [7–9, 11, 13], the CST-NPR is perhaps the most practical compressed suffix tree.

For typical texts and component choices, the size of CST ranges from the to bytes of CST-Sada to the to bytes of FCST [11, 13]. There are also some CST variants for repetitive texts, such as versioned document collections and collections of individual genomes. Abeliuk et al. [13] developed a variant of CST-NPR that can sometimes be smaller than bits, while achieving performance similar to the FCST. Navarro and Ordez [15] used grammar-based compression for the tree representation of CST-Sada. The resulting compressed suffix tree (GCT) requires slightly more space than the CST-NPR of Abeliuk et al., while being closer to the non-repetitive CST-Sada and CST-NPR in performance.

2.3. Relative Lempel–Ziv

RLZ parsing [27] compresses target sequence relative to reference sequence . The target sequence is represented as a concatenation of phrases, where is the starting position of the phrase in the reference, is the length of the copied substring and is the mismatch character. If phrase starts from position in the target, then and .

The shortest RLZ parsing of the target sequence can be found in (essentially) linear time. The algorithm builds a CSA for the reverse of the reference sequence, and then parses the target sequence greedily by using backward searching. If the edit distance between the reference and the target is , we need at most phrases to represent the target sequence. On the other hand, because the relative order of the phrases can be different in sequences and , the edit distance can be much larger than the number of phrases in the shortest RLZ parsing.

In a straightforward implementation, the phrase pointers and the mismatch characters can be stored in arrays and . These arrays take and bits. To support random access to the target sequence, we can encode phrase lengths as a bitvector of length [27]: we set if is the first character of a phrase. The bitvector requires bits if we use the sdarray representation [60]. To extract , we first determine the phrase , with . If , we return the mismatch character . Otherwise we determine the phrase offset with a select query, and return the character .

Ferrada et al. [32] showed how, by using relative pointers instead of absolute pointers, we can avoid the use of select queries. They also achieved better compression of DNA collections, in which most of the differences between the target sequences and the reference sequence are single-character substitutions. By setting , the general case simplifies to . If most of the differences are single-character substitutions, will often be . This corresponds to with relative pointers, making run-length encoding of the pointer array worthwhile.

When we sort the suffixes in lexicographic order, substitutions in the text move suffixes around, creating insertions and deletions in the SA and related structures. In the LCP array, an insertion or deletion affecting can also change the value of . Hence, RLZ with relative pointers is not enough to compress the LCP array.

Cox et al. [61] modified Ferrada et al.’s version of RLZ to handle other small variations in addition to single-character substitutions. After adding a phrase to the parse, we look ahead a bounded number of positions to find potential phrases with a relative pointer close to the previous explicit relative pointer . If we can find a sufficiently long phrase this way, we encode the pointer differentially as . Otherwise we store explicitly. We can then save space by storing the differential pointers separately using less bits per pointer. Because there can be multiple mismatch characters between phrases and , we also need a prefix-sum data structure for finding the range containing the mismatches. Cox et al. showed that their approach compresses both DNA sequences and LCP arrays better than Ferrada et al.’s version, albeit with slightly slower random access. We refer the reader to their paper for more details of their implementation.

3. RELATIVE FMI

The relative FMI (RFM) [44] is a compressed SA of a sequence relative to the CSA of another sequence. The index is based on approximating the longest common subsequence (LCS) of and , where is the reference sequence and is the target sequence, and storing several structures based on the common subsequence. Given a representation of supporting rank and select, we can use the relative index to simulate rank and select on .

In this section, we describe the relative FMI using the notation and the terminology of this paper. We also give an explicit description of the locate and extract functionality, which was not included in the original paper. Finally, we describe a more space-efficient variant of the algorithm for building a relative FMI with full functionality.

3.1. Basic index

Assume that we have found a long common subsequence of sequences and . We call positions and lcs-positions, if they are in the common subsequence. If and are the binary sequences marking the common subsequence (), we can move between lcs-positions in the two sequences with rank and select operations. If is an lcs-position, the corresponding position in sequence is . We denote this pair of lcs-bitvectors.

In its most basic form, the relative FMI only supports find queries by simulating rank queries on . It does this by storing and the complements (subsequences of non-aligned characters) and . The lcs-bitvectors are compressed using entropy-based compression [62], while the complements are stored in structures similar to the reference .

3.2. Relative select

We can implement the entire functionality of a CSA with rank queries on the BWT. However, if we use the CSA in a compressed suffix tree, we also need select queries to support forward searching with and queries. We can always implement select queries by binary searching with rank queries, but the result will be much slower than the rank queries.

A faster alternative to support select queries in the relative FMI is to build a relative select structure rselect [63]. Let be a sequence consisting of the characters of sequence in sorted order. Alternatively, is a sequence such that . The relative select structure consists of bitvectors , where and , as well as the C array for the common subsequence.

To compute , we first determine how many of the first occurrences of character are lcs-positions with . Then we check from bit whether the occurrence we are looking for is an lcs-position or not. If it is, we find the position in as , and then map to by using . Otherwise we find the occurrence in with , and return .

3.3. Full functionality

If we want the relative FMI to support locate and extract queries, we cannot build it from any common subsequence of and . We need a bwt-invariant subsequence [44], where the alignment of the BWTs is also an alignment of the original sequences.

In addition to the structures already mentioned, the full relative FMI has another pair of lcs-bitvectors, , which marks the bwt-invariant subsequence in the original sequences. If and are lcs-positions, we set and .3

To compute the answer to a query, we start by iterating backwards with LF queries, until we find an lcs-position after steps. Then we map position to the corresponding position in by using . Finally, we determine with a locate query in the reference index, and map the result to by using .4 The result of the query is .

The access required for extract queries is supported in a similar way. We find the lcs-position for the smallest , and map it to the corresponding position by using . Then we determine by using the reference index, and map it back to with . Finally, we iterate steps backward with LF queries to find .

If the target sequence contains long insertions not present in the reference, we may also want to include some SA and ISA samples for querying those regions.

3.4. Finding a bwt-invariant subsequence

With the basic relative FMI, we approximate the longest common subsequence of and by partitioning the BWTs according to lexicographic contexts, finding the longest common subsequence for each pair of substrings in the partitioning, and concatenating the results. The algorithm is fast, easy to parallelize and quite space-efficient. As such, RFM construction is practical, having been tested with datasets of hundreds of gigabytes in size.

In the following, we describe a more space-efficient variant of the original algorithm [44] for finding a bwt-invariant subsequence. We

save space by simulating the mutual SA with and ;

match suffixes of and only if they are adjacent in ; and

run-length encode the match arrays to save space.

Letandbe two sequences, and letand. The left match of suffixis the suffix, ifandpoints to a suffix of (). The right match of suffixis the suffix, ifandpoints to a suffix of.

We simulate the mutual SA with , , and the merging bitvector of length . We set , if points to a suffix of . The merging bitvector can be built in time, where is the time required for an LF query, by extracting from and backward searching for it in [64]. Suffix has a left (right) match, if ().

Our next step is building the match arrays and , which correspond to the arrays and in the original algorithm. This is done by traversing backwards from with LF queries and following the left and the right matches of the current suffix. During the traversal, we maintain the invariant with . If suffix has a left (right) match, we use the shorthand () to refer to its position in .

We say that suffixes and have the same left match if . Let to be a maximal run of suffixes having the same left match, with suffixes to starting with the same characters as their left matches.5 We find the left match of suffix as by using , and set . The right match array is built in a similar way.

The match arrays require bits of space. If sequences and are similar, the runs in the arrays tend to be long. Hence, we can run-length encode the match arrays to save space. The traversal takes time, where and denote the time required by rank and select operations, is the number of runs in the two arrays, and is the SA sample interval in .6

The final step is determining the bwt-invariant subsequence. We find a binary sequence , which marks the common subsequence in , and a strictly increasing integer sequence , which contains the positions of the common subsequence in . This can be done by finding the longest increasing subsequence over , where we consider both and as candidates for the value at position , and using the found subsequence as . If comes from (), we set , and align suffix with its left (right) match in the bwt-invariant subsequence. We can find and in time with bits of additional working space with a straightforward modification of the dynamic programming algorithm for finding the longest increasing subsequence. The dynamic programming tables can be run-length encoded, but we found that this did not yield good time/space trade-offs.

As sequence is strictly increasing, we can convert it into binary sequence , marking for all . Afterwards, we consider the binary sequences and as the lcs-bitvectors . Because every suffix of starts with the same character as its matches stored in the and arrays, subsequences and are identical.

Note that the full relative FMI is more limited than the basic index, because it does not handle substring moves very well. Let and , for two random sequences and of length each. Because and are very similar, we can expect to find a common subsequence of length almost . On the other hand, the length of the longest bwt-invariant subsequence is around , because we can either match the suffixes of or the suffixes of in and , but not both.

4. RELATIVE SUFFIX TREE

The RST is a CST-NPR of the target sequence relative to a CST of the reference sequence. It consists of two major components: the relative FMI with full functionality and the relativeLCP (RLCP) array. The optional relative select structure can be generated or loaded from disk to speed up algorithms based on forward searching. The RLCP array is based on RLZ parsing, while the support for nsv/psv/rmq queries is based on a minima tree over the phrases.

4.1. Relative LCP array

Given LCP array , we define the differentialLCParray as and for . If for some , then is the same as , with each value incremented by [6]. This means , making the DLCP array of a repetitive text compressible with grammar-based compression [13].

We make a similar observation in the relative setting. If target sequence is similar to the reference sequence , then their LCP arrays should also be similar. If there are long identical ranges , the corresponding DLCP ranges and are also identical. Hence, we can use RLZ parsing to compress either the original LCP array or the DLCP array.

While the identical ranges are a bit longer in the LCP array, we opt to compress the DLCP array, because it behaves better when there are long repetitions in the sequences. In particular, assembled genomes often have long runs of character , which correspond to regions of very large LCP values. If the runs are longer in the target sequence than in the reference sequence, the RLZ parsing of the LCP array will have many mismatch characters. The corresponding ranges in the DLCP array typically consist of values , making them much easier to compress.

We consider DLCP arrays as strings over an integer alphabet and create an RLZ parsing of relative to . After parsing, we switch to using as the reference. The reference is stored in a structure we call slarray, which is a variant of LCP-byte. [51]. Small values are stored in a byte array, while large values are marked with a in the byte array and stored separately. To quickly find the large values, we also build a structure over the byte array. The slarray provides reasonably fast random access and fast sequential access to the underlying array.

The RLZ parsing produces a sequence of phrases (see Section 2.3; since we are using Cox et al.’s version, is now a string). Because some queries involve decompressing an entire phrase, we limit the maximum phrase length to 1024. We also require that for all , using the last character of the copied substring as a mismatch if necessary.

Phrase lengths are encoded in the bitvector in the usual way. We convert the strings of mismatching DLCP values into strings of absolute LCP values, append them into the mismatch array and store the array as an slarray. The mismatch values are used as absolute samples for the differential encoding.

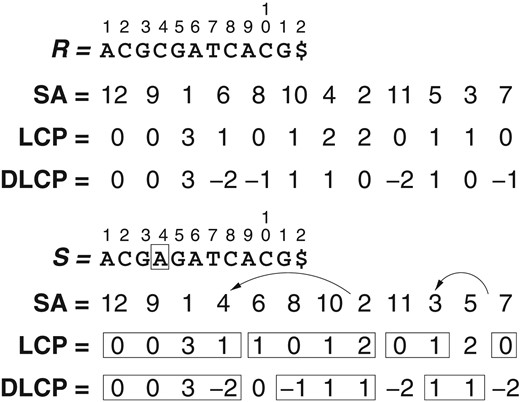

Example. Figure 1 shows an example reference sequence and target sequence , with their corresponding arrays , and . The single edit at with respect to may affect the positions of suffixes 4 and previous ones in , although in general only a limited number of preceding suffixes are affected. In our example, suffix 4 moves from position 7 in to position 4 in , and suffix 3 moves from position 11 in to position 10 in . Each suffix that is moved from to may alter the values at positions or (depending on whether or ), as well as and , of . We have surrounded in rectangles the conserved regions in (some are conserved by chance). Even some suffixes that are not moved may change their values. In turn, each change in may change values and .

After the change, we can parse into three phrases (with the copied symbols surrounded by rectangles): , , , where the latter is formed by chance. We represent this parsing as (since we store the absolute values for the mismatches), , and (or rather ).

Let us compute for . This corresponds to phrase number , which starts at position in . The corresponding position in is (or rather ), and the mapped position is . Finally, . According to our formula, then, we have .

4.2. Supporting nsv/psv/rmq queries

Suffix tree topology can be inferred from the LCP array with range minimum queries (rmq) and next/previous smaller value (nsv/psv) queries [6]. Some suffix tree operations are more efficient if we also support next/previous smaller or equal value (nsev/psev) queries [13]. Query () finds the next (previous) value smaller than or equal to .

In order to support the queries, we build a 64-ary minima tree over the phrases of the RLZ parsing. Each leaf node stores the smallest LCP value in the corresponding phrase, while each internal node stores the smallest value in the subtree. Internal nodes are created and stored in a levelwise fashion, so that each internal node, except perhaps the rightmost one of each level, has 64 children.

We encode the minima tree as two arrays. The smallest LCP values are stored in , which we encode as an slarray. Plain array stores the starting offset of each level in , with the leaves stored starting from offset . If is a minima tree node located at level , the corresponding minimum value is , the parent of the node is , and its first child is .

A range minimum query starts by finding the minimal range of phrases covering the query and the maximal range of phrases contained in the query (note that and ). We then use the minima tree to find the leftmost minimum value in , and find the leftmost occurrence in phrase . If and , we decompress phrase and find the leftmost minimum value (with ) in the phrase. If , we update . Finally, we check phrase in a similar way, if and . The answer to the range minimum query is , so we return .7 Finally, the particular case where no phrase is contained in is handled by sequentially scanning one or two phrases in .

The remaining queries are all similar to each other. In order to answer query , we start by finding the phrase containing position , and then determining . Next we scan the rest of the phrase to see whether there is a smaller value later in the phrase. If so, we return . Otherwise we traverse the minima tree to find the smallest with . We decompress phrase , find the leftmost position with , and return .

5. EXPERIMENTS

We have implemented the RST in C++, extending the old relative FMI implementation.8 The implementation is based on the Succinct Data Structure Library (SDSL) 2.0 [65]. Some parts of the implementation have been parallelized using OpenMP and the libstdc++ parallel mode.

As our reference CSA, we used the succinct SA (SSA) [58, 66] implemented using SDSL components. Our implementation is very similar to csa_wt in SDSL, but we needed better access to the internals than what the SDSL interface provides. SSA encodes the BWT as a Huffman-shaped wavelet tree, combining fast queries with size close to the order-0 empirical entropy. This makes it the index of choice for DNA sequences [57]. In addition to the plain SSA with uncompressed bitvectors, we also used SSA-RRR with entropy-compressed bitvectors [62] to highlight the the time-space trade-offs achieved with better compression

We sampled SA in suffix order and ISA in text order. In SSA, the sample intervals were 17 for SA and 64 for ISA. In RFM, we used sample interval 257 for SA and 512 for ISA to handle the regions that do not exist in the reference. The sample intervals for suffix order sampling were primes due to the long runs of character in the assembled genomes. If the number of long runs of character in the indexed sequence is even, the lexicographic ranks of almost all suffixes in half of the runs are odd, and those runs are almost completely unsampled. This can be avoided by making the sample interval and the number of runs relatively prime.

The experiments were done on a system with two 16-core AMD Opteron 6378 processors and 256 GB of memory. The system was running Ubuntu 12.04 with Linux kernel 3.2.0. We compiled all code with g++ version 4.9.2. We allowed index construction to use multiple threads, while confining the query benchmarks to a single thread. As AMD Opteron uses a non-uniform memory access architecture, accessing local memory controlled by the same physical CPU is faster than accessing remote memory controlled by another CPU. In order to ensure that all data structures are in local memory, we set the CPU affinity of the query benchmarks with the taskset utility.

As our target sequence, we used the maternal haplotypes of the 1000 Genomes Project individual NA12878 [67]. As the reference sequence, we used the 1000 Genomes Project version of the GRCh37 assembly of the human reference genome.9 Because NA12878 is female, we also created a reference sequence without chromosome Y.

In the following, a basic FMI is an index supporting only find queries, while a full index also supports locate and extract queries.

5.1. Indexes and their sizes

Table 2 lists the resource requirements for building the relative indexes, assuming that we have already built the corresponding non-relative structures for the sequences. As a comparison, building an FMI for a human genome typically takes 16–17 min and 25–26 GB of memory. While the construction of the basic RFM index is highly optimized, the other construction algorithms are just the first implementations. Building the optional rselect structures takes 4 min using two threads and around 730 megabytes ( bits) of working space in addition to RFM and rselect.

Sequence lengths and resources used by index construction for NA12878 relative to the human reference genome with and without chromosome Y. Approx and Inv denote the approximate LCS and the bwt-invariant subsequence, respectively. Sequence lengths are in millions of base pairs, while construction resources are in minutes of wall clock time and gigabytes of memory.

| . | Sequence length . | RFM (basic) . | RFM (full) . | RST . | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| ChrY . | Reference (M) . | Target (M) . | Approx (M) . | Inv (M) . | Time (min) . | Memory (GB) . | Time (min) . | Memory (GB) . | Time (min) . | Memory (GB) . |

| Yes | 3096 | 3036 | 2992 | 2980 | 1.42 | 4.41 | 175 | 84.0 | 629 | 141 |

| No | 3036 | 3036 | 2991 | 2980 | 1.33 | 4.38 | 173 | 82.6 | 593 | 142 |

| . | Sequence length . | RFM (basic) . | RFM (full) . | RST . | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| ChrY . | Reference (M) . | Target (M) . | Approx (M) . | Inv (M) . | Time (min) . | Memory (GB) . | Time (min) . | Memory (GB) . | Time (min) . | Memory (GB) . |

| Yes | 3096 | 3036 | 2992 | 2980 | 1.42 | 4.41 | 175 | 84.0 | 629 | 141 |

| No | 3036 | 3036 | 2991 | 2980 | 1.33 | 4.38 | 173 | 82.6 | 593 | 142 |

Sequence lengths and resources used by index construction for NA12878 relative to the human reference genome with and without chromosome Y. Approx and Inv denote the approximate LCS and the bwt-invariant subsequence, respectively. Sequence lengths are in millions of base pairs, while construction resources are in minutes of wall clock time and gigabytes of memory.

| . | Sequence length . | RFM (basic) . | RFM (full) . | RST . | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| ChrY . | Reference (M) . | Target (M) . | Approx (M) . | Inv (M) . | Time (min) . | Memory (GB) . | Time (min) . | Memory (GB) . | Time (min) . | Memory (GB) . |

| Yes | 3096 | 3036 | 2992 | 2980 | 1.42 | 4.41 | 175 | 84.0 | 629 | 141 |

| No | 3036 | 3036 | 2991 | 2980 | 1.33 | 4.38 | 173 | 82.6 | 593 | 142 |

| . | Sequence length . | RFM (basic) . | RFM (full) . | RST . | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| ChrY . | Reference (M) . | Target (M) . | Approx (M) . | Inv (M) . | Time (min) . | Memory (GB) . | Time (min) . | Memory (GB) . | Time (min) . | Memory (GB) . |

| Yes | 3096 | 3036 | 2992 | 2980 | 1.42 | 4.41 | 175 | 84.0 | 629 | 141 |

| No | 3036 | 3036 | 2991 | 2980 | 1.33 | 4.38 | 173 | 82.6 | 593 | 142 |

The sizes of the final indexes are listed in Table 3. The full RFM is over twice the size of the basic index, but still 3.3–3.7 times smaller than the full SSA-RRR and 4.6–5.3 times smaller than the full SSA. The RLCP array is 2.7 times larger than the RFM index with the full human reference and 1.5 times larger with the female reference. Hence having a separate female reference is worthwhile, if there are more than a few female genomes among the target sequences. The optional rselect structure is almost as large as the basic RFM index.

Various indexes for NA12878 relative to the human reference genome with and without chromosome Y. The total for RST includes the full RFM. Index sizes are in megabytes and in bits per character.

| . | SSA . | SSA-RRR . | RFM . | RST . | |||||

|---|---|---|---|---|---|---|---|---|---|

| ChrY . | Basic . | Full . | Basic . | Full . | Basic . | Full . | RLCP . | Total . | rselect . |

| Yes | 1248 MB | 2110 MB | 636 MB | 1498 MB | 225 MB | 456 MB | 1233 MB | 1689 MB | 190 MB |

| 3.45 bpc | 5.83 bpc | 1.76 bpc | 4.14 bpc | 0.62 bpc | 1.26 bpc | 3.41 bpc | 4.67 bpc | 0.52 bpc | |

| No | 1248 MB | 2110 MB | 636 MB | 1498 MB | 186 MB | 400 MB | 597 MB | 997 MB | 163 MB |

| 3.45 bpc | 5.83 bpc | 1.76 bpc | 4.14 bpc | 0.51 bpc | 1.11 bpc | 1.65 bpc | 2.75 bpc | 0.45 bpc | |

| . | SSA . | SSA-RRR . | RFM . | RST . | |||||

|---|---|---|---|---|---|---|---|---|---|

| ChrY . | Basic . | Full . | Basic . | Full . | Basic . | Full . | RLCP . | Total . | rselect . |

| Yes | 1248 MB | 2110 MB | 636 MB | 1498 MB | 225 MB | 456 MB | 1233 MB | 1689 MB | 190 MB |

| 3.45 bpc | 5.83 bpc | 1.76 bpc | 4.14 bpc | 0.62 bpc | 1.26 bpc | 3.41 bpc | 4.67 bpc | 0.52 bpc | |

| No | 1248 MB | 2110 MB | 636 MB | 1498 MB | 186 MB | 400 MB | 597 MB | 997 MB | 163 MB |

| 3.45 bpc | 5.83 bpc | 1.76 bpc | 4.14 bpc | 0.51 bpc | 1.11 bpc | 1.65 bpc | 2.75 bpc | 0.45 bpc | |

Various indexes for NA12878 relative to the human reference genome with and without chromosome Y. The total for RST includes the full RFM. Index sizes are in megabytes and in bits per character.

| . | SSA . | SSA-RRR . | RFM . | RST . | |||||

|---|---|---|---|---|---|---|---|---|---|

| ChrY . | Basic . | Full . | Basic . | Full . | Basic . | Full . | RLCP . | Total . | rselect . |

| Yes | 1248 MB | 2110 MB | 636 MB | 1498 MB | 225 MB | 456 MB | 1233 MB | 1689 MB | 190 MB |

| 3.45 bpc | 5.83 bpc | 1.76 bpc | 4.14 bpc | 0.62 bpc | 1.26 bpc | 3.41 bpc | 4.67 bpc | 0.52 bpc | |

| No | 1248 MB | 2110 MB | 636 MB | 1498 MB | 186 MB | 400 MB | 597 MB | 997 MB | 163 MB |

| 3.45 bpc | 5.83 bpc | 1.76 bpc | 4.14 bpc | 0.51 bpc | 1.11 bpc | 1.65 bpc | 2.75 bpc | 0.45 bpc | |

| . | SSA . | SSA-RRR . | RFM . | RST . | |||||

|---|---|---|---|---|---|---|---|---|---|

| ChrY . | Basic . | Full . | Basic . | Full . | Basic . | Full . | RLCP . | Total . | rselect . |

| Yes | 1248 MB | 2110 MB | 636 MB | 1498 MB | 225 MB | 456 MB | 1233 MB | 1689 MB | 190 MB |

| 3.45 bpc | 5.83 bpc | 1.76 bpc | 4.14 bpc | 0.62 bpc | 1.26 bpc | 3.41 bpc | 4.67 bpc | 0.52 bpc | |

| No | 1248 MB | 2110 MB | 636 MB | 1498 MB | 186 MB | 400 MB | 597 MB | 997 MB | 163 MB |

| 3.45 bpc | 5.83 bpc | 1.76 bpc | 4.14 bpc | 0.51 bpc | 1.11 bpc | 1.65 bpc | 2.75 bpc | 0.45 bpc | |

Table 4 lists the sizes of the individual components of the relative FMI. Including the chromosome Y in the reference increases the sizes of almost all relative components, with the exception of and . In the first case, the common subsequence still covers approximately the same positions in as before. In the second case, chromosome Y appears in bitvector as a long run of 0-bits, which compresses well. The components of a full RFM index are larger than the corresponding components of a basic RFM index, because the bwt-invariant subsequence is shorter than the approximate longest common subsequence (see Table 2).

Breakdown of component sizes in the RFM index for NA12878 relative to the human reference genome with and without chromosome Y in bits per character.

| . | Basic RFM . | Full RFM . | ||

|---|---|---|---|---|

| ChrY . | Yes (bpc) . | No (bpc) . | Yes (bpc) . | No (bpc) . |

| RFM | 0.62 | 0.51 | 1.26 | 1.11 |

| 0.12 | 0.05 | 0.14 | 0.06 | |

| 0.05 | 0.05 | 0.06 | 0.06 | |

| 0.45 | 0.42 | 0.52 | 0.45 | |

| – | – | 0.35 | 0.35 | |

| SA samples | – | – | 0.12 | 0.12 |

| ISA samples | – | – | 0.06 | 0.06 |

| . | Basic RFM . | Full RFM . | ||

|---|---|---|---|---|

| ChrY . | Yes (bpc) . | No (bpc) . | Yes (bpc) . | No (bpc) . |

| RFM | 0.62 | 0.51 | 1.26 | 1.11 |

| 0.12 | 0.05 | 0.14 | 0.06 | |

| 0.05 | 0.05 | 0.06 | 0.06 | |

| 0.45 | 0.42 | 0.52 | 0.45 | |

| – | – | 0.35 | 0.35 | |

| SA samples | – | – | 0.12 | 0.12 |

| ISA samples | – | – | 0.06 | 0.06 |

Bold values aimed to emphasize the base structure (RFM).

Breakdown of component sizes in the RFM index for NA12878 relative to the human reference genome with and without chromosome Y in bits per character.

| . | Basic RFM . | Full RFM . | ||

|---|---|---|---|---|

| ChrY . | Yes (bpc) . | No (bpc) . | Yes (bpc) . | No (bpc) . |

| RFM | 0.62 | 0.51 | 1.26 | 1.11 |

| 0.12 | 0.05 | 0.14 | 0.06 | |

| 0.05 | 0.05 | 0.06 | 0.06 | |

| 0.45 | 0.42 | 0.52 | 0.45 | |

| – | – | 0.35 | 0.35 | |

| SA samples | – | – | 0.12 | 0.12 |

| ISA samples | – | – | 0.06 | 0.06 |

| . | Basic RFM . | Full RFM . | ||

|---|---|---|---|---|

| ChrY . | Yes (bpc) . | No (bpc) . | Yes (bpc) . | No (bpc) . |

| RFM | 0.62 | 0.51 | 1.26 | 1.11 |

| 0.12 | 0.05 | 0.14 | 0.06 | |

| 0.05 | 0.05 | 0.06 | 0.06 | |

| 0.45 | 0.42 | 0.52 | 0.45 | |

| – | – | 0.35 | 0.35 | |

| SA samples | – | – | 0.12 | 0.12 |

| ISA samples | – | – | 0.06 | 0.06 |

Bold values aimed to emphasize the base structure (RFM).

The size breakdown of the RLCP array can be seen in Table 5. Phrase pointers and phrase lengths take space proportional to the number of phrases. As there are more mismatches between the copied substrings with the full human reference than with the female reference, the absolute LCP values take a larger proportion of the total space with the full reference. Shorter phrase length increases the likelihood that the minimal LCP value in a phrase is a large value, increasing the size of the minima tree.

Breakdown of component sizes in the RLCP array for NA12878 relative to the human reference genome with and without chromosome Y. The number of phrases, average phrase length and the component sizes in bits per character. ‘Parse’ contains and , ‘Literals’ contains and , and ‘Tree’ contains and .

| ChrY . | Phrases (million) . | Length . | Parse (bpc) . | Literals (bpc) . | Tree (bpc) . | Total (bpc) . |

|---|---|---|---|---|---|---|

| Yes | 128 | 23.6 | 1.35 | 1.54 | 0.52 | 3.41 |

| No | 94 | 32.3 | 0.97 | 0.41 | 0.27 | 1.65 |

| ChrY . | Phrases (million) . | Length . | Parse (bpc) . | Literals (bpc) . | Tree (bpc) . | Total (bpc) . |

|---|---|---|---|---|---|---|

| Yes | 128 | 23.6 | 1.35 | 1.54 | 0.52 | 3.41 |

| No | 94 | 32.3 | 0.97 | 0.41 | 0.27 | 1.65 |

Breakdown of component sizes in the RLCP array for NA12878 relative to the human reference genome with and without chromosome Y. The number of phrases, average phrase length and the component sizes in bits per character. ‘Parse’ contains and , ‘Literals’ contains and , and ‘Tree’ contains and .

| ChrY . | Phrases (million) . | Length . | Parse (bpc) . | Literals (bpc) . | Tree (bpc) . | Total (bpc) . |

|---|---|---|---|---|---|---|

| Yes | 128 | 23.6 | 1.35 | 1.54 | 0.52 | 3.41 |

| No | 94 | 32.3 | 0.97 | 0.41 | 0.27 | 1.65 |

| ChrY . | Phrases (million) . | Length . | Parse (bpc) . | Literals (bpc) . | Tree (bpc) . | Total (bpc) . |

|---|---|---|---|---|---|---|

| Yes | 128 | 23.6 | 1.35 | 1.54 | 0.52 | 3.41 |

| No | 94 | 32.3 | 0.97 | 0.41 | 0.27 | 1.65 |

In order to use relative data structures, we also need to have the reference data structures in memory. The basic SSA used by the basic RFM takes 1283 MB with chromosome Y and 1248 MB without, while the full SSA used by the full RFM takes 2162 MB and 2110 MB, respectively. The reference LCP array used by the RLCP array requires 3862 MB and 3690 MB with and without chromosome Y.

5.2. Query times

Average query times for the basic operations can be seen in Tables 6 and 7. The results for LF and queries in the full FMIs are similar to the earlier ones with basic indexes [63]. Random access to the RLCP array is about 30 times slower than to the LCP array, while sequential access is 10 times slower. The nsv, psv and rmq queries are comparable with 1–2 random accesses to the RLCP array.

Average query times in microseconds for 10 million random queries in the full SSA, the full SSA-RRR and the full RFM for NA12878 relative to the human reference genome with and without chromosome Y.

| ChrY . | SSA . | SSA-RRR . | RFM . | rselect . | |||

|---|---|---|---|---|---|---|---|

| . | LF (μs) . | (μs) . | LF (μs) . | (μs) . | LF (μs) . | (μs) . | (μs) . |

| Yes | |||||||

| No | |||||||

| ChrY . | SSA . | SSA-RRR . | RFM . | rselect . | |||

|---|---|---|---|---|---|---|---|

| . | LF (μs) . | (μs) . | LF (μs) . | (μs) . | LF (μs) . | (μs) . | (μs) . |

| Yes | |||||||

| No | |||||||

Average query times in microseconds for 10 million random queries in the full SSA, the full SSA-RRR and the full RFM for NA12878 relative to the human reference genome with and without chromosome Y.

| ChrY . | SSA . | SSA-RRR . | RFM . | rselect . | |||

|---|---|---|---|---|---|---|---|

| . | LF (μs) . | (μs) . | LF (μs) . | (μs) . | LF (μs) . | (μs) . | (μs) . |

| Yes | |||||||

| No | |||||||

| ChrY . | SSA . | SSA-RRR . | RFM . | rselect . | |||

|---|---|---|---|---|---|---|---|

| . | LF (μs) . | (μs) . | LF (μs) . | (μs) . | LF (μs) . | (μs) . | (μs) . |

| Yes | |||||||

| No | |||||||

Query times in microseconds in the LCP array (slarray) and the RLCP array for NA12878 relative to the human reference genome with and without chromosome Y. For the random queries, the query times are averages over 100 million queries. The range lengths for the rmq queries were (for ) with probability . For sequential access, we list the average time per position for scanning the entire array.

| . | LCP array . | RLCP array . | |||||

|---|---|---|---|---|---|---|---|

| ChrY . | Random (μs) . | Sequential (μs) . | Random (μs) . | Sequential (μs) . | nsv (μs) . | psv (μs) . | rmq (μs) . |

| Yes | |||||||

| No | |||||||

| . | LCP array . | RLCP array . | |||||

|---|---|---|---|---|---|---|---|

| ChrY . | Random (μs) . | Sequential (μs) . | Random (μs) . | Sequential (μs) . | nsv (μs) . | psv (μs) . | rmq (μs) . |

| Yes | |||||||

| No | |||||||

Query times in microseconds in the LCP array (slarray) and the RLCP array for NA12878 relative to the human reference genome with and without chromosome Y. For the random queries, the query times are averages over 100 million queries. The range lengths for the rmq queries were (for ) with probability . For sequential access, we list the average time per position for scanning the entire array.

| . | LCP array . | RLCP array . | |||||

|---|---|---|---|---|---|---|---|

| ChrY . | Random (μs) . | Sequential (μs) . | Random (μs) . | Sequential (μs) . | nsv (μs) . | psv (μs) . | rmq (μs) . |

| Yes | |||||||

| No | |||||||

| . | LCP array . | RLCP array . | |||||

|---|---|---|---|---|---|---|---|

| ChrY . | Random (μs) . | Sequential (μs) . | Random (μs) . | Sequential (μs) . | nsv (μs) . | psv (μs) . | rmq (μs) . |

| Yes | |||||||

| No | |||||||

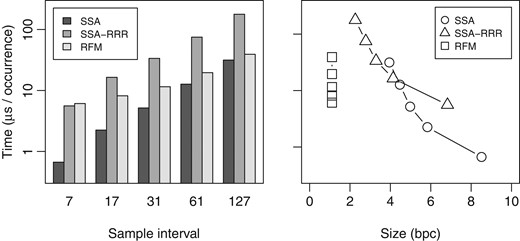

We also tested the locate performance of the full RFM index, and compared it with SSA and SSA-RRR. We built the indexes with SA sample intervals , , , and , using the reference without chromosome Y for RFM.10 The ISA sample interval was the maximum of and the SA sample interval. We extracted 2 million random patterns of length , consisting of characters , from the target sequence, and measured the total time taken by find and locate queries. The results can be seen in Fig. 2. While SSA and SSA-RRR query times were proportional to the sample interval, RFM used 5.4–7.6 μs per occurrence more than SSA, resulting in slower growth in query times. In particular, RFM with sample interval was faster than SSA with sample interval 61. As the locate performance of the RFM index is based on the sample interval in the reference, it is generally best to use dense sampling (e.g. 7 or 17), unless there are only a few target sequences.

Average find and locate times in microseconds per occurrence for 2 million patterns of length 32 with a total of 255 million occurrences on NA12878 relative to the human reference genome without chromosome Y. Left: query time vs. suffix array sample interval. Right: query time vs. index size in bits per character.

5.3. Synthetic collections

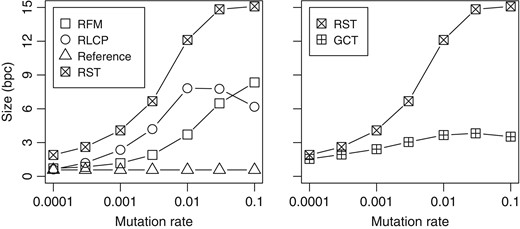

In order to determine how the differences between the reference sequence and the target sequence affect the size of relative structures, we built RST for various synthetic datasets. We took a 20 MB prefix of the human reference genome as the reference sequence, and generated 25 target sequences with every mutation rate. A total of 90% of the mutations were single-character substitutions, while 5% were insertions and another 5% deletions. The length of an insertion or deletion was with probability .

The results can be seen in Fig. 3 (left). The size of the RLCP array grew quickly with increasing mutation rates, peaking at . At that point, the average length of an RLZ phrase was comparable with what could be found in the DLCP arrays of unrelated DNA sequences. With even higher mutation rates, the phrases became slightly longer due to the smaller average LCP values. The RFM index, on the other hand, remained small until . Afterwards, the index started growing quickly, eventually overtaking the RLCP array.

Index size in bits per character vs. mutation rate for 25 synthetic sequences relative to a 20 MB reference.

We also compared the size of the RST with GCT [15], which is essentially a CST-Sada for repetitive collections. While the structures are intended for different purposes, the comparison shows how much additional space is used for providing access to the suffix trees of individual datasets. We chose to skip the CST-NPR for repetitive collections [13], as its implementation was not stable enough.

Figure 3 (right) shows the sizes of the compressed suffix trees. The numbers for RST include individual indexes for each of the 25 target sequences as well as the reference data, while the numbers for GCT are for a single index containing the 25 sequences. With low mutation rates, RST was not much larger than GCT. The size of RST starts growing quickly at around , while the size of GCT stabilizes at 3–4 bpc.

5.4. Suffix tree operations

In the final set of experiments, we compared the performance of RST with the SDSL implementations of various CST. We used the maternal haplotypes of NA12878 as the target sequence and the human reference genome without chromosome Y as the reference sequence. We built RST, CST-Sada, CST-NPR and FCST for the target sequence. CST-Sada uses Sadakane’s CSA (CSA-Sada) [54] as its CSA, while the other SDSL implementations use SSA. We used PLCP as the LCP encoding with both CST-Sada and CST-NPR, and also built CST-NPR with LCP-dac.

We used three algorithms for the performance comparison. The first algorithm is preorder traversal of the suffix tree using SDSL iterators (cst_dfs_const_forward_iterator). The iterators use operations , , , and , though queries are rare, as the iterators cache the most recent parent nodes.

The other two algorithms find the maximal substrings of the query string occurring in the indexed text, and report the lexicographic range for each such substring. This is a key task in common problems such as computing matching statistics [68] or finding maximal exact matches. The forward algorithm uses , , , and , while the backward algorithm [69] uses , and .

We used the paternal haplotypes of chromosome 1 of NA12878 as the query string in the maximal substrings algorithms. Because some tree operations in the SDSL CST take time proportional to the depth of the current node, we truncated the runs of character in the query string into a single character. Otherwise searching in the deep subtrees would have made some SDSL suffix trees much slower than RST.

The results can be seen in Table 8. RST was 1.8 times smaller than FCST and several times smaller than the other CST. In depth-first traversal, RST was four times slower than CST-NPR and about 15 times slower than CST-Sada. FCST was orders of magnitude slower, managing to traverse only 5.3% of the tree before the run was terminated after 24 h.

Compressed suffix trees for the maternal haplotypes of NA12878 relative to the human reference genome without chromosome Y. Component choices; index size in bits per character; average time in microseconds per node for preorder traversal; and average time in microseconds per character for finding maximal substrings shared with the paternal haplotypes of chromosome 1 of NA12878 using forward and backward algorithms. The figures in parentheses are estimates based on the progress made in the first 24 hours.

| . | . | . | . | . | Maximal substrings . | |

|---|---|---|---|---|---|---|

| CST . | CSA . | LCP . | Size (bpc) . | Traversal (μs) . | Forward (μs) . | Backward (μs) . |

| CST-Sada | CSA-Sada | PLCP | 12.33 | |||

| CST-NPR | SSA | PLCP | 10.79 | |||

| CST-NPR | SSA | LCP-dac | 18.08 | |||

| FCST | SSA | – | 4.98 | () | ||

| RST | RFM | RLCP | 2.75 | |||

| RST + rselect | RFM | RLCP | 3.21 | |||

| . | . | . | . | . | Maximal substrings . | |

|---|---|---|---|---|---|---|

| CST . | CSA . | LCP . | Size (bpc) . | Traversal (μs) . | Forward (μs) . | Backward (μs) . |

| CST-Sada | CSA-Sada | PLCP | 12.33 | |||

| CST-NPR | SSA | PLCP | 10.79 | |||

| CST-NPR | SSA | LCP-dac | 18.08 | |||

| FCST | SSA | – | 4.98 | () | ||

| RST | RFM | RLCP | 2.75 | |||

| RST + rselect | RFM | RLCP | 3.21 | |||

Compressed suffix trees for the maternal haplotypes of NA12878 relative to the human reference genome without chromosome Y. Component choices; index size in bits per character; average time in microseconds per node for preorder traversal; and average time in microseconds per character for finding maximal substrings shared with the paternal haplotypes of chromosome 1 of NA12878 using forward and backward algorithms. The figures in parentheses are estimates based on the progress made in the first 24 hours.

| . | . | . | . | . | Maximal substrings . | |

|---|---|---|---|---|---|---|

| CST . | CSA . | LCP . | Size (bpc) . | Traversal (μs) . | Forward (μs) . | Backward (μs) . |

| CST-Sada | CSA-Sada | PLCP | 12.33 | |||

| CST-NPR | SSA | PLCP | 10.79 | |||

| CST-NPR | SSA | LCP-dac | 18.08 | |||

| FCST | SSA | – | 4.98 | () | ||

| RST | RFM | RLCP | 2.75 | |||

| RST + rselect | RFM | RLCP | 3.21 | |||

| . | . | . | . | . | Maximal substrings . | |

|---|---|---|---|---|---|---|

| CST . | CSA . | LCP . | Size (bpc) . | Traversal (μs) . | Forward (μs) . | Backward (μs) . |

| CST-Sada | CSA-Sada | PLCP | 12.33 | |||

| CST-NPR | SSA | PLCP | 10.79 | |||

| CST-NPR | SSA | LCP-dac | 18.08 | |||

| FCST | SSA | – | 4.98 | () | ||

| RST | RFM | RLCP | 2.75 | |||

| RST + rselect | RFM | RLCP | 3.21 | |||

It should be noted that the memory access patterns of traversing CST-Sada, CST-NPR and RST are highly local. Traversal times are mostly based on the amount of computation done, while memory latency is less important than in the individual query benchmarks. In RST, the algorithm is essentially the following: (i) compute rmq in the current range; (ii) proceed recursively to the left subinterval and (iii) proceed to the right subinterval. This involves plenty of redundant work, as can be seen by comparing the traversal time ( per node) to sequential RLCP access ( per position). A faster algorithm would decompress large parts of the LCP array at once, build the corresponding subtrees in postorder [51], and traverse the resulting trees.

RST with rselect is as fast as CST-Sada in the forward algorithm, 1.8–2.7 times slower than CST-NPR, and 4.1 times faster than FCST. Without the additional structure, RST becomes 2.6 times slower. As expected [69], the backward algorithm is much faster than the forward algorithm. CST-Sada and RST, which combine slow backward searching with a fast tree, have similar performance to FCST, which combines fast searching with a slow tree. CST-NPR is about an order of magnitude faster than the others in the backward algorithm.

6. DISCUSSION

We have introduced RST, a new kind of compressed suffix tree for repetitive sequence collections. Our RST compresses the suffix tree of an individual sequence relative to the suffix tree of a reference sequence. It combines an already known relative SA with a novel relative-compressed LCP representation (RLCP). When the sequences are similar enough (e.g. two human genomes), the RST requires about 3 bits per symbol on each target sequence. This is close to the space used by the most space-efficient CST designed to store repetitive collections in a single tree, but the RST provides a different functionality as it indexes each sequence individually. The RST supports query and navigation operations within a few microseconds, which is competitive with the largest and fastest CST.

The size of RST is proportional to the amount of sequence that is present either in the reference or in the target, but not both. This is unusual for relative compression, where any additional material in the reference is generally harmless. Sorting the suffixes in lexicographic tends to distribute the additional suffixes all over the SA, creating many mismatches between the suffix-based structures of the reference and the target. For example, the 60 million suffixes from chromosome Y created 34 million new phrases in the RLZ parse of the DLCP array of a female genome, doubling the size of the RLCP array. Having multiple references (e.g. male and female) can hence be worthwhile when building relative data structures for many target sequences.

While our RST implementation provides competitive time/space trade-offs, there is still much room for improvement. Most importantly, some of the construction algorithms require significant amounts of time and memory. In many places, we have chosen simple and fast implementation options, even though there could be alternatives that require significantly less space without being too much slower.

Our RST is a relative version of the CST-NPR. Another alternative for future work is a relative CST-Sada, using RLZ compressed bitvectors for suffix tree topology and PLCP.

FUNDING

This work was supported by Basal Funds FB0001, Conicyt, Chile; Fondecyt Grant [1-170048], Chile; Academy of Finland grants [258308] and [250345] (CoECGR); the Jenny and Antti Wihuri Foundation, Finland; and the Wellcome Trust grant [098051].

REFERENCES

Footnotes

We refer to ‘long-range’ repetitiveness, where similar texts may be found far away in the text collection.

We drop the subscript if the text is evident from the context.

For simplicity, we assume that the endmarker is not a part of the bwt-invariant subsequence. Hence for all lcs-positions .

If and are lcs-positions, the corresponding lcs-positions in the original sequences are and .

The first character of a suffix can be determined by using the array.

The time bound assumes text-order sampling.

The definition of the query only calls for the leftmost minimum position . We also return , because suffix tree operations often need it.

The current implementation is available at https://github.com/jltsiren/relative-fm.

With RFM, the sample intervals apply to the reference SSA.

Author notes

Handling editor: Raphael Clifford