
David J Armstrong, Jevgenij Gamper, Theodoros Damoulas, Exoplanet validation with machine learning: 50 new validated Kepler planets, Monthly Notices of the Royal Astronomical Society, Volume 504, Issue 4, July 2021, Pages 5327–5344, https://doi.org/10.1093/mnras/staa2498


ABSTRACT

Over 30 per cent of the $\sim$4000 known exoplanets to date have been discovered using ‘validation’, where the statistical likelihood of a transit arising from a false positive (FP), non-planetary scenario is calculated. For the large majority of these validated planets calculations were performed using the vespa algorithm. Regardless of the strengths and weaknesses of vespa, it is highly desirable for the catalogue of known planets not to be dependent on a single method. We demonstrate the use of machine learning algorithms, specifically a Gaussian process classifier (GPC) reinforced by other models, to perform probabilistic planet validation incorporating prior probabilities for possible FP scenarios. The GPC can attain a mean log-loss per sample of 0.54 when separating confirmed planets from FPs in the Kepler Threshold-Crossing Event (TCE) catalogue. Our models can validate thousands of unseen candidates in seconds once applicable vetting metrics are calculated, and can be adapted to work with the active Transiting Exoplanet Survey Satellite (TESS) mission, where the large number of observed targets necessitates the use of automated algorithms. We discuss the limitations and caveats of this methodology, and after accounting for possible failure modes newly validate 50 Kepler candidates as planets, sanity checking the validations by confirming them with vespa using up-to-date stellar information. Concerning discrepancies with vespa arise for many other candidates, which typically resolve in favour of our models. Given such issues, we caution against using single-method planet validation with either method until the discrepancies are fully understood.

1 INTRODUCTION

Our understanding of exoplanets, their diversity, and population has been in large part driven by transiting planet surveys. Ground-based surveys (e.g. Bakos et al. 2002; Pollacco et al. 2006; Pepper et al. 2007; Wheatley et al. 2018) set the scene and discovered many of the first exoplanets. Planet populations, architecture, and occurrence rates were exposed by the groundbreaking Kepler mission (Borucki 2016), which to date has discovered over 2300 confirmed or validated planets, and was succeeded by its follow-on K2 (Howell et al. 2014). Now the Transiting Exoplanet Survey Satellite (TESS) mission (Ricker et al. 2015) is surveying most of the sky, and is expected to at least double the number of known exoplanets.

The planet discovery process has a number of distinct steps, which have evolved with the available data. Surveys typically produce more candidates than true planets, in some cases by a large factor. False positive (FP) scenarios produce signals that can mimic that of a true transiting planet (Santerne et al. 2013; Cabrera et al. 2017). Key FP scenarios include various configurations of eclipsing binaries, both on the target star and on unresolved background stars, which, when blended with light from other stars, can produce eclipses very similar to a planet transit. Systematic variations from the instrument, cosmic rays, or temperature fluctuations can produce apparently significant periodicities that can be mistaken for small planets, especially at longer orbital periods (Burke et al. 2015, 2019; Thompson et al. 2018).

Given the problem of separating true planetary signals from FPs, vetting methods have been developed to select the best candidates to target with often limited follow-up resources (Kostov et al. 2019). Such vetting methods look for common signs of FPs, including secondary eclipses, centroid offsets indicating background contamination, differences between odd and even transits, and diagnostic information relating to the instrument (Twicken et al. 2018). Ideally, vetted planetary candidates are then observed with independent methods to confirm them as exoplanets, often by detecting radial velocity variations at the same orbital period as the candidate transit (e.g. Cloutier et al. 2019).

With the advent of the Kepler data, a large number of generally high-quality candidates became available, but most orbited faint host stars, with V magnitude >14. Such faint stars preclude the use of radial velocities to follow up most candidates, especially long-period, low signal-to-noise cases. At this time, vetting methodologies were expanded to attempt planet ‘validation’, the statistical confirmation of a planet without necessarily obtaining extra data (e.g. Morton & Johnson 2011). Statistical confirmation is not ideal compared to independent discovery techniques, but it allowed the ‘validation’ of over 1200 planets, over half of the Kepler discoveries, either through consideration of the ‘multiplicity boost’ or through explicit consideration of the probability of each FP scenario. Once developed, such methods proved useful both for validating planets and for prioritizing follow-up resources, and they are still in use even for bright stars where follow-up is possible (Quinn et al. 2019; Vanderburg et al. 2019).

There are several planet validation techniques in the literature: pastis (Díaz et al. 2014; Santerne et al. 2015), blender (Torres et al. 2015), vespa (Morton & Johnson 2011; Morton 2012; Morton et al. 2016), the newly released triceratops (Giacalone & Dressing 2020), and a specific consideration of Kepler’s multiple planetary systems (Lissauer et al. 2014; Rowe et al. 2014). Each has strengths and weaknesses, but only vespa has been applied to a large number of candidates. This dependence on one method for $\sim$30 per cent of the known exoplanets to date introduces risks for all dependent exoplanet research fields, including planet formation, evolution, population synthesis, and occurrence rates. In this work, we aim to introduce an independent validation method using machine learning techniques, particularly a Gaussian process classifier (GPC).

Our motivation for creating another validation technique is threefold. First, given the importance of designating a candidate planet as ‘true’ or ‘validated’, independent methods are desirable to reduce the risk of algorithm-dependent flaws having an unexpected impact. Second, we develop a machine learning methodology that allows near-instant probabilistic validation of new candidates once light curves and applicable metadata are available. As such, our method could be used for closer to real-time target selection and prioritization. Lastly, much work has been performed recently giving an improved view of the Kepler satellite target stars through Gaia, and in developing an improved understanding of the statistical performance and issues relating to Kepler discoveries (e.g. Bryson & Morton 2017; Mathur et al. 2017; Berger et al. 2018; Burke et al. 2019). We aim to incorporate this new knowledge into our algorithm and so potentially improve the reliability of our results over previous work, in particular through the incorporation of systematic non-astrophysical FPs.

We initially focus on the Kepler data set with the goal of expanding to create a general code applicable to TESS data in future work. Because of the speed of our method we are able to take the entire Threshold-Crossing Event (TCE) catalogue of Kepler candidates (Twicken et al. 2016) as our input, as opposed to the typically studied Kepler objects of interest (KOIs; Thompson et al. 2018), in essence potentially replacing a large part of the planet detection process from candidate detection to planet validation.

Past efforts to classify candidates in transit surveys with machine learning have been made, using primarily random forests (McCauliff et al. 2015; Armstrong et al. 2018; Caceres et al. 2019; Schanche et al. 2019) and convolutional neural nets (Ansdell et al. 2018; Shallue & Vanderburg 2018; Chaushev et al. 2019; Dattilo et al. 2019; Yu et al. 2019; Osborn et al. 2020). To date these have all focused on identifying FPs or ranking candidates within a survey. We build on past work by focusing on separating true planets, rather than merely planetary candidates, from FPs, and by doing so probabilistically to allow planet validation.

Section 2 describes the mathematical framework we employ for planet validation, and the specific machine learning models used. Section 3 defines the input data we use, how it is represented, and how we define the training set of data used to train our models. Section 4 describes our model selection and optimization process. Section 5 describes how the outputs of those models are converted into posterior probabilities, and combined with a priori probabilities for each FP scenario to produce a robust determination of the probability that a given candidate is a real planet. Section 6 shows the results of applying our methodology to the Kepler data set, and Section 7 discusses the applicability and limitations of our method, as well as its potential for other data sets.

2 FRAMEWORK

2.1 Overview

Consider a training data set $\mathcal{D} = \lbrace \boldsymbol{x}_n, s_n \rbrace_{n=1}^N$ containing N TCEs, where $\boldsymbol{x}_n \in \mathbb{R}^d$ is the feature vector of vetting metrics and parameters derived from the Kepler pipeline and $s_n$ is its label. Let $p(\boldsymbol{X}, \boldsymbol{s})$ be the joint density of the feature array $\boldsymbol{X}$ and the generative hypothesis labels, where $\boldsymbol{s}$ is the array of labels (i.e. planet, or FPs such as an eclipsing binary or hierarchical eclipsing binary). Generative modelling of the joint density has been the approach taken in the previous literature for exoplanet validation; see, for example, pastis (Díaz et al. 2014; Santerne et al. 2015), where the generative probability for hypothesis label s is explicitly calculated using Bayes’ formula.

The scenarios in question represent the full set of potential astrophysical and non-astrophysical causes of the observed candidate signal. Let P(s|I) represent the empirical prior probability of a given scenario s occurring, where s = 1 represents a confirmed planet and s = 0 refers to the FP hypothesis, including all astrophysical and non-astrophysical FP situations that could generate the observed signal. I refers to a priori available information on the various scenarios.

The prior information I represents the overall probability for a given scenario to occur in the Kepler data set, as well as the occurrence rates of planets or binaries as a whole given the Kepler precision and target stars. In this work, we also include in I the centroid information that determines the chance of a resolved or unresolved background source being the origin of a signal. This approach allows us to easily vary the prior information using target-specific centroid information that is not otherwise available to the models. We discuss the P(s|I) priors in detail in Section 5.4.

Prior factors dependent on an individual candidate’s parameters, including, for example, the specific occurrence rate of planets at the implied planet radius, as opposed to that on average for the whole Kepler sample, as well as the difference in probability of eclipse for planets or stars at a given orbital period and stellar or planetary radius, are incorporated directly in the model output $p(s = 1 \mid \boldsymbol{x}^*)$ for a candidate with feature vector $\boldsymbol{x}^*$.
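For reference, the generative approach mentioned above applies Bayes’ formula. In its standard form (a generic statement of the rule, not a transcription of any specific validation package), the posterior probability of scenario $s$ for a candidate with features $\boldsymbol{x}$ and prior information $I$ is

$$
p(s \mid \boldsymbol{x}, I) = \frac{p(\boldsymbol{x} \mid s)\, P(s \mid I)}{\sum_{s'} p(\boldsymbol{x} \mid s')\, P(s' \mid I)}
$$

The discriminative models used in this work instead estimate $p(s = 1 \mid \boldsymbol{x}^*)$ directly, without modelling $p(\boldsymbol{x} \mid s)$, while the scenario priors $P(s \mid I)$ are handled separately as described above.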

2.2 Gaussian process classifier

A commonly used set of machine learning tools consists of parametric models, in which the function describing the process belongs to a specific family of functions with a finite number of parameters, e.g. linear or quadratic regression. More flexible alternatives are Bayesian non-parametric models (Rasmussen & Williams 2006), in particular Gaussian processes (GPs), where one places a prior distribution over functions $\boldsymbol{f}$ rather than a distribution over the parameters $\boldsymbol{w}$ of a function. A GP is specified by a mean function of the inputs and a kernel function that gives the covariance of the function values at any two input instances.
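As a minimal sketch of GP classification (not the configuration used in this work), the snippet below fits scikit-learn's `GaussianProcessClassifier` to synthetic two-class data standing in for scaled vetting features. The kernel (a constant amplitude times an RBF), the data, and the train/test split are illustrative assumptions. It also reports the mean log-loss per held-out sample, the metric quoted in the abstract.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessClassifier
from sklearn.gaussian_process.kernels import RBF, ConstantKernel
from sklearn.metrics import log_loss

rng = np.random.default_rng(0)

# Synthetic stand-in for scaled vetting features: two overlapping classes
# (label 1 = "planet", label 0 = "false positive"). Not real Kepler data.
n_per_class, n_features = 100, 3
X = np.vstack([
    rng.normal(loc=-1.0, scale=1.0, size=(n_per_class, n_features)),
    rng.normal(loc=+1.0, scale=1.0, size=(n_per_class, n_features)),
])
y = np.concatenate([np.zeros(n_per_class, dtype=int), np.ones(n_per_class, dtype=int)])

# Shuffle, then split into training and held-out sets.
order = rng.permutation(len(y))
X, y = X[order], y[order]
X_train, X_test = X[:150], X[150:]
y_train, y_test = y[:150], y[150:]

# GP prior over latent functions: the kernel sets the covariance between two inputs.
# Amplitude * RBF is an illustrative choice, not the kernel used in the paper.
kernel = ConstantKernel(1.0) * RBF(length_scale=1.0)
gpc = GaussianProcessClassifier(kernel=kernel, random_state=0)
gpc.fit(X_train, y_train)

# Column 1 of predict_proba is p(s = 1 | x*) for each held-out sample.
p_planet = gpc.predict_proba(X_test)[:, 1]
mean_log_loss = log_loss(y_test, p_planet)
print(f"mean log-loss per sample: {mean_log_loss:.3f}")
```

A lower mean log-loss rewards probabilities that are both correct and well calibrated, which matters here because the outputs are later treated as probabilities rather than hard labels.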

2.3 Random forest and extra trees

Random forests (RF; Breiman 2001) are a well-known machine learning method with several desirable properties and a history of use in exoplanet transit candidate vetting (McCauliff et al. 2015). They are robust to uninformative features, allow control of overfitting, and allow measurement of the feature importances driving classification decisions. RFs are constructed from a large number of decision trees, each of which gives a classification decision based on a random subset of the input data. To keep this work as concise as possible, we direct the interested reader to detailed descriptions elsewhere (Breiman 2001; Louppe 2014).

Extra trees (ET), also known as extremely randomized trees, are intuitively similar in construction to RFs (Geurts, Ernst & Wehenkel 2006). The key difference lies in the feature split: whereas RFs choose each split by optimizing a deterministic measure such as the Gini impurity, the feature split in an ET is random.
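The RF/ET distinction can be illustrated with scikit-learn's `RandomForestClassifier` and `ExtraTreesClassifier`, assumed here as stand-ins; the synthetic data and hyperparameters are illustrative, not those selected in Section 4. One informative feature is mixed with four noise features to show the impurity-based feature importances mentioned above.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier

rng = np.random.default_rng(1)

# Synthetic data: feature 0 carries the class signal, features 1-4 are pure noise,
# mimicking uninformative vetting metrics. Not real Kepler data.
n = 400
y = rng.integers(0, 2, size=n)
X = rng.normal(size=(n, 5))
X[:, 0] += 2.0 * y

models = {
    # RF: each tree sees a bootstrap resample; split thresholds are optimised on Gini.
    "RF": RandomForestClassifier(n_estimators=200, criterion="gini", random_state=0),
    # ET: a random threshold is drawn per candidate feature; the best of these is kept.
    "ET": ExtraTreesClassifier(n_estimators=200, criterion="gini", random_state=0),
}

importances = {}
for name, model in models.items():
    model.fit(X, y)
    # Impurity-based importances are normalised to sum to 1 across features.
    importances[name] = model.feature_importances_
    print(name, np.round(importances[name], 3))
```

Both ensembles should assign most of the importance to feature 0, which is the property that makes such importances useful for pruning the feature set.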

2.4 Multilayer perceptron

A standard linear regression or classification model passes a linear combination of instance features through an activation function: a non-linearity in the case of classification, or the identity in the case of regression. A multilayer perceptron (MLP), on the other hand, applies a sequence of linear transformations, each followed by an activation function; the transformations between the input and the output form the hidden layers. Each linear transformation consists of a set number of linear combinations, commonly referred to as neurons, where every neuron takes as input the outputs of every neuron in the previous layer. The number of hidden layers, the number of neurons per layer, and the activation function are hyperparameters to be chosen. The interested reader should refer to Bishop (2006) for a more in-depth discussion of neural networks.
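A minimal NumPy sketch of the MLP forward pass described above follows. The layer sizes, the ReLU hidden activation, and the sigmoid output are illustrative choices, and the weights are random rather than trained, so the printed probabilities only demonstrate the shapes and data flow.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def mlp_forward(x, weights, biases):
    """Forward pass of a binary-classification MLP.

    Each hidden layer is a linear transformation (every neuron combines the outputs
    of every neuron in the previous layer) followed by ReLU; the output layer applies
    a sigmoid so the result can be read as a probability.
    """
    h = x
    for W, b in zip(weights[:-1], biases[:-1]):
        h = relu(h @ W + b)
    return sigmoid(h @ weights[-1] + biases[-1])


rng = np.random.default_rng(0)
layer_sizes = [4, 8, 8, 1]  # 4 inputs, two hidden layers of 8 neurons, 1 output
weights = [rng.normal(scale=0.5, size=(m, n))
           for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n) for n in layer_sizes[1:]]

x = rng.normal(size=(5, 4))  # a batch of 5 hypothetical feature vectors
probs = mlp_forward(x, weights, biases)
print(probs.ravel())
```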

3 INPUT DATA

We use Data Release 25 (DR25) of the Kepler data, covering quarters 1–17 (Twicken et al. 2016; Thompson et al. 2018). The data measure stellar brightness for nearly 200 000 stars over a period of 4 yr. Data and metadata were obtained from the NASA Exoplanet Archive (Akeson et al. 2013). The Kepler data are passed through the Kepler data processing pipeline (Jenkins et al. 2010; Jenkins 2017), and detrended using the Presearch Data Conditioning pipeline (Smith et al. 2012; Stumpe et al. 2012). Planetary candidates are identified by the transiting planet search part of the Kepler pipeline, which produces TCEs where candidate transits appear with a significance >7.1σ. The recovery rate of planets from this process is investigated in detail in Christiansen (2017) and Burke & Catanzarite (2017). These TCEs were then designated as Kepler KOIs if they passed several vetting checks known as the ‘data validation’ (DV) process detailed in Twicken et al. (2018). KOIs are further labelled as FPs or planets based on a combination of methods, typically either individual follow-up with other planet detection methods, the detection of transit timing variations (e.g. Panichi, Migaszewski & Goździewski 2019), or statistical validation via a number of published methods (e.g. Morton et al. 2016).

3.1 Metadata

We utilize the TCE table for Kepler DR25 (Twicken et al. 2016). This table contains 34 032 TCEs, with information on each TCE and the results of several diagnostic checks. ‘Rogue’ TCEs that were the result of a previous bug in the transit search and flagged using the ‘tce_rogue_flag’ column were removed, leaving 32 534 TCEs for this study that form the basis of our data set.
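The rogue-TCE cut amounts to a simple row filter. The sketch below assumes the TCE table is loaded into a pandas DataFrame and that `tce_rogue_flag` equals 1 for rogue TCEs; the toy rows and the `kepid`/`tce_plnt_num` columns are placeholders.

```python
import pandas as pd


def drop_rogue_tces(tce_table: pd.DataFrame) -> pd.DataFrame:
    """Remove TCEs flagged by 'tce_rogue_flag' (assumed: 1 = rogue, 0 = normal)."""
    return tce_table.loc[tce_table["tce_rogue_flag"] != 1].reset_index(drop=True)


# Toy table standing in for the DR25 TCE table (values are made up).
toy = pd.DataFrame({
    "kepid": [1, 1, 2, 3],
    "tce_plnt_num": [1, 2, 1, 1],
    "tce_rogue_flag": [0, 1, 0, 0],
})
clean = drop_rogue_tces(toy)
print(len(toy), "->", len(clean))  # 4 -> 3
```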

We update the TCE table with improved estimates of stellar temperature, surface gravity, metallicity, and radius derived using Gaia Data Release 2 (DR2) information (Berger et al. 2018; Gaia Collaboration et al. 2018). In each case, if no information is available for a given Kepler target in Berger et al. (2018), we fall back on the values in Mathur et al. (2017), and in cases with no information in either use the original values in the TCE table, which are from the Kepler Input Catalog (KIC; Brown et al. 2011). We also include Kepler magnitudes from the KIC. The planetary radii are updated in line with the updated stellar radii. We also recalculate the maximum ephemeris correlation, a measure of correlation between TCEs on the same stellar target (McCauliff et al. 2015) and add it to the TCE table.
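The fallback order for stellar parameters (Berger et al. 2018, then Mathur et al. 2017, then the original TCE/KIC values) can be expressed with pandas `combine_first`. This is a hedged sketch: the toy frames and the column names `radius` and `tce_prad` are placeholders, and rescaling planet radii by the ratio of new to original stellar radius (at a fixed radius ratio) is our reading of how the radii are "updated in line with" the stellar radii.

```python
import numpy as np
import pandas as pd


def coalesce_stellar(tce: pd.DataFrame, berger: pd.DataFrame, mathur: pd.DataFrame,
                     column: str) -> pd.Series:
    """Take `column` from Berger et al., else Mathur et al., else the original TCE value.

    All frames are assumed to be indexed by Kepler ID; missing targets or values are NaN.
    """
    best = berger[column].reindex(tce.index)
    best = best.combine_first(mathur[column].reindex(tce.index))
    return best.combine_first(tce[column])


# Toy inputs indexed by a hypothetical Kepler ID (values are illustrative only).
tce = pd.DataFrame({"radius": [1.0, 0.9, 1.2], "tce_prad": [2.0, 1.5, 3.0]},
                   index=pd.Index([10, 11, 12], name="kepid"))
berger = pd.DataFrame({"radius": [1.1]}, index=pd.Index([10], name="kepid"))
mathur = pd.DataFrame({"radius": [0.8, np.nan]}, index=pd.Index([11, 12], name="kepid"))

new_radius = coalesce_stellar(tce, berger, mathur, "radius")
# For a fixed planet-to-star radius ratio, planet radius scales linearly with stellar radius.
new_prad = tce["tce_prad"] * new_radius / tce["radius"]
print(new_radius.tolist(), new_prad.round(3).tolist())
```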

The TCE table includes several χ² statistics, with their associated degrees of freedom, for various models fitted to the TCE signal. To better represent these tests, we convert each such pair of columns into the ratio of the χ² to the degrees of freedom. Missing values are filled with the column median in the case of stellar magnitudes, or with zeros for all other columns.
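A sketch of the χ²-to-ratio conversion and the missing-value policy, assuming pandas. The column names (`chisq_a`, `dof_a`, `kepmag`) are hypothetical placeholders rather than the archive's names, and mapping a division by zero degrees of freedom to a missing value (later filled with zero) is our assumption.

```python
import numpy as np
import pandas as pd


def chisq_to_ratios(df: pd.DataFrame, pairs: dict) -> pd.DataFrame:
    """Replace each (chi2, dof) column pair by a single chi2/dof ratio column.

    `pairs` maps output name -> (chi2 column, dof column); names are placeholders.
    A zero dof yields NaN, which the fill step below then handles.
    """
    out = df.copy()
    for new_col, (chi2_col, dof_col) in pairs.items():
        out[new_col] = out[chi2_col] / out[dof_col].replace(0, np.nan)
        out = out.drop(columns=[chi2_col, dof_col])
    return out


def fill_missing(df: pd.DataFrame, magnitude_cols: list) -> pd.DataFrame:
    """Median-fill magnitude columns; fill every other missing value with zero."""
    out = df.copy()
    for col in magnitude_cols:
        out[col] = out[col].fillna(out[col].median())
    return out.fillna(0.0)


toy = pd.DataFrame({
    "chisq_a": [10.0, 20.0, np.nan],
    "dof_a": [5.0, 10.0, 4.0],
    "kepmag": [14.0, np.nan, 16.0],
})
features = fill_missing(chisq_to_ratios(toy, {"chisq_a_dof_rat": ("chisq_a", "dof_a")}),
                        magnitude_cols=["kepmag"])
print(features)
```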

The full range of included data is shown in Table 1. This is a subset of the original TCE table, with several columns removed based on their contribution to the models as described in Section 3.3. Brief descriptions of each column are given; readers should refer to the NASA Exoplanet Archive for further detail.

Table 1. Data features. GPC – Gaussian process classifier; RF – random forest; ET – extra trees; MLP – multilayer perceptron.

| Name | Description | In GPC | In RF/ET/MLP |
|---|---|---|---|
| tce_period | Orbital period of the TCE | x | x |
| tce_time0bk | Centre time of the first detected transit in BJD | x | x |
| tce_ror | Planet radius divided by the stellar radius | x | x |
| tce_dor | Planet–star distance at mid-transit divided by the stellar radius | x | x |
| tce_duration | Duration of the candidate transit (h) | x | x |
| tce_ingress | Ingress duration (h) | x | x |
| tce_depth | Transit depth (ppm) | x | x |
| tce_model_snr | Transit depth normalized by the mean flux uncertainty in transit | x | x |
| tce_robstat | A measure of depth variations across all transits | x | x |
| tce_prad | Implied planet radius | x | x |
| wst_robstat | As tce_robstat for the most significant secondary transit | x | x |
| wst_depth | Fitted depth of the most significant secondary transit | x | x |
| tce_mesmedian | See Twicken et al. (2018) | x | x |
| tce_mesmad | See Twicken et al. (2018) | x | x |
| tce_maxmes | Multiple event statistic (MES) of the most significant secondary transit | x | x |
| tce_minmes | MES of the least significant secondary transit | x | x |
| tce_maxmesd | Phase in days of the most significant secondary transit | x | x |
| tce_minmesd | Phase in days of the least significant secondary transit | x | x |
| tce_max_sngle_ev | Maximum single event statistic | x | x |
| tce_max_mult_ev | Maximum MES | x | x |
| tce_bin_oedp_stat | Odd–even depth comparison statistic | x | x |
| tce_rmesmad | Ratio of MES to median absolute deviation (MAD) MES | x | x |
| tce_rsnrmes | Ratio of signal-to-noise ratio to MES | x | x |
| tce_rminmes | Ratio of minimum MES to MES | x | x |
| tce_tce_albedostat | Significance of geometric albedo derived from secondary | x | x |
| tce_ptemp_stat | Significance of effective temperature derived from secondary | x | x |
| boot_fap | Bootstrap false alarm probability | x | x |
| tce_cap_stat | Ghost core aperture statistic | x | x |
| tce_hap_stat | Ghost halo aperture statistic | x | x |
| tce_dikco_msky | Angular offset between event centroids from KIC position | x | x |
| max_ephem_corr | Maximum ephemeris correlation | x | x |
| Kepler | Kepler magnitude | x | x |
| Teff | Host stellar temperature | x | x |
| Radius | Host stellar radius from Gaia Collaboration et al. (2018) | x | x |
| tce_model_redchisq | Transit fit model reduced χ² | x | x |
| tce_chisq1dof1 | See Tenenbaum et al. (2013) and Seader et al. (2013) | x | x |
| tce_chisq1dof2 | See Tenenbaum et al. (2013) and Seader et al. (2013) | x | x |
| tce_chisqgofdofrat | See Seader et al. (2015) | x | x |
| somstat | Self-organizing map (SOM) statistic using a new SOM trained on these data | x | |
| a17stat | SOM statistic using the SOM of Armstrong et al. (2017) | x | |
| Local View light curve | 201-bin local view of the transit light curve | | x |

3.2 Light curves

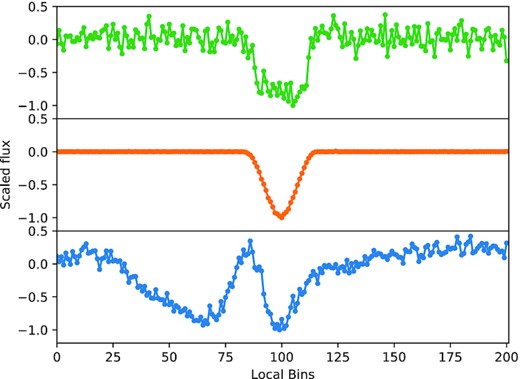

We use the DV Kepler light curves, as detailed in Twicken et al. (2018), which are produced in the same way as light curves used for the Kepler transiting planet search (TPS). The light-curve data are phase folded at the TCE ephemeris then binned into 201 equal width bins in phase covering a region of seven transit durations centred on the candidate transit. We choose these parameters following Shallue & Vanderburg (2018), their ‘local’ view, although we use a window covering one less transit duration to provide better resolution of the transit event. Example local views are shown in Fig. 1. Empty bins are filled by interpolating surrounding bins. As in Shallue & Vanderburg (2018) we also implemented a ‘global’ view using 2001 phase bins covering the entire phase-folded light curve, but in our case found no improvement in classifier performance and so dropped this view to reduce the input feature space. We hypothesize that this is due to the inclusion of additional metrics measuring the significance of secondary eclipses.

Figure 1. ‘Local view’ 201-bin representation of the transit for a planet (top), astrophysical FP (middle), and non-astrophysical FP (bottom).
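The folding and binning described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions (mean flux per bin, bins uniform in time from mid-transit, linear interpolation over empty bins, no flux normalization); it is not the pipeline code used in this work. The usage lines build a synthetic box-shaped transit.

```python
import numpy as np


def local_view(time, flux, period, t0, duration, n_bins=201, width_in_durations=7.0):
    """Phase-fold a light curve and bin it into a 'local view' around the transit.

    time, period, t0 and duration share units (e.g. days). Bins span
    `width_in_durations` transit durations centred on mid-transit; empty bins
    are filled by linear interpolation from the populated ones.
    """
    # Time from the nearest mid-transit, in the range [-period/2, period/2).
    phase_time = (time - t0 + 0.5 * period) % period - 0.5 * period
    half_width = 0.5 * width_in_durations * duration
    edges = np.linspace(-half_width, half_width, n_bins + 1)
    centres = 0.5 * (edges[:-1] + edges[1:])

    idx = np.digitize(phase_time, edges) - 1  # bin index of each point
    inside = (idx >= 0) & (idx < n_bins)
    sums = np.bincount(idx[inside], weights=flux[inside], minlength=n_bins)
    counts = np.bincount(idx[inside], minlength=n_bins)

    binned = np.full(n_bins, np.nan)
    filled = counts > 0
    binned[filled] = sums[filled] / counts[filled]
    binned[~filled] = np.interp(centres[~filled], centres[filled], binned[filled])
    return binned


# Usage: a synthetic 1e-3 deep box transit with randomly sampled times.
rng = np.random.default_rng(0)
period, t0, duration = 3.0, 0.5, 0.1  # days
time = rng.uniform(0.0, 90.0, size=5000)
phase_time = (time - t0 + 0.5 * period) % period - 0.5 * period
flux = 1.0 + 1e-4 * rng.normal(size=time.size)
flux[np.abs(phase_time) < 0.5 * duration] -= 1e-3
view = local_view(time, flux, period, t0, duration)
print(view.shape, view.argmin())
```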

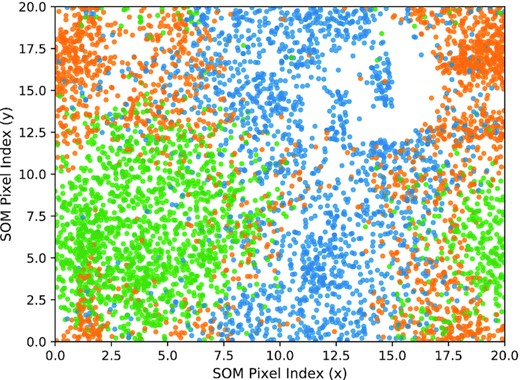

We consider several machine learning algorithms in Section 4. Some algorithms are unlikely to deal well with direct light-curve data, as it would dominate the feature space. For these we create a summary statistic for the light curves following the self-organizing map (SOM) method of Armstrong, Pollacco & Santerne (2017), mapping our light curves on to their publicly available Kepler SOM. We create a further SOM statistic using the same methodology but with a SOM trained on our own data set, to encourage discrimination of non-astrophysical FPs, which were not studied in Armstrong et al. (2017). The resulting SOM is shown in Fig. 2. These SOM statistics are a form of dimensionality reduction, compressing the light-curve shape into a single statistic.

Figure 2. SOM pixel locations of labelled training set light curves, showing strong clustering. Green – planet; orange – astrophysical FP; blue – non-astrophysical FP. A random jitter of between −0.5 and 0.5 pixels has been added in both axes for clarity.
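For readers unfamiliar with SOMs, the following is a minimal NumPy sketch of a classic online Kohonen map and of a per-pixel summary statistic. The grid size, learning schedule, synthetic light-curve shapes and, in particular, `planet_fraction_statistic` (the fraction of planet-labelled training curves in a pixel) are hypothetical illustrations, not the statistic defined by Armstrong, Pollacco & Santerne (2017).

```python
import numpy as np


def train_som(data, grid=(10, 10), n_iter=2000, lr0=0.5, sigma0=3.0, seed=0):
    """Train a small rectangular self-organizing map with the classic online rule."""
    rng = np.random.default_rng(seed)
    rows, cols = grid
    # Grid coordinates of every SOM pixel, used by the neighbourhood function.
    coords = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
    weights = rng.normal(size=(rows * cols, data.shape[1]))
    for t in range(n_iter):
        frac = t / n_iter
        lr = lr0 * (1.0 - frac)              # linearly decaying learning rate
        sigma = sigma0 * (1.0 - frac) + 0.5  # shrinking neighbourhood radius
        x = data[rng.integers(len(data))]
        bmu = np.argmin(np.sum((weights - x) ** 2, axis=1))  # best-matching unit
        d2 = np.sum((coords - coords[bmu]) ** 2, axis=1)
        h = np.exp(-d2 / (2.0 * sigma ** 2))  # Gaussian neighbourhood on the grid
        weights += lr * h[:, None] * (x - weights)
    return weights


def best_matching_units(data, weights):
    """Index of the closest SOM pixel for each row of `data`."""
    d2 = ((data[:, None, :] - weights[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def planet_fraction_statistic(bmu_train, y_train, bmu_new, n_pixels):
    """Hypothetical statistic: fraction of planet labels in each curve's pixel."""
    planets = np.bincount(bmu_train, weights=y_train, minlength=n_pixels)
    totals = np.bincount(bmu_train, minlength=n_pixels)
    frac = np.divide(planets, totals, out=np.full(n_pixels, 0.5), where=totals > 0)
    return frac[bmu_new]


# Usage: flat-bottomed (label 1) versus V-shaped (label 0) synthetic dips.
rng = np.random.default_rng(1)
phase = np.linspace(-1.0, 1.0, 20)
box = -(np.abs(phase) < 0.3).astype(float)
vee = -np.clip(1.0 - np.abs(phase) / 0.3, 0.0, None)
X = np.vstack([box + 0.1 * rng.normal(size=(100, 20)),
               vee + 0.1 * rng.normal(size=(100, 20))])
y = np.r_[np.ones(100), np.zeros(100)]
W = train_som(X, grid=(6, 6))
bmu = best_matching_units(X, W)
stat = planet_fraction_statistic(bmu, y, bmu, n_pixels=36)
print(stat[:100].mean(), stat[100:].mean())
```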

For a given algorithm, either the two SOM statistics or the 201 bin values of the ‘local’ view light curve are appended to the TCE table feature set. As such we have two data representations: ‘feature+SOM’ and ‘feature+LC’. The features used, and the models they apply to, are detailed in Table 1.

3.3 Minimally useful attributes

It is desirable to reduce the feature space to the minimum useful set, so as to simplify the resulting model and reduce the proportion of non-informative features passed to the models. We drop columns from the TCE table using a number of criteria. Initially, metadata associated with the table are dropped, including delivery name and Kepler identifier. Columns associated with the error on another column are dropped. Columns associated with a trapezoid fit to the light curves are dropped in favour of the actual planet model fit also performed. We drop most centroid information, limiting the models to one column providing the angular offset between event centroids and the KIC position, finding that this performed better than differential measures. Columns related to the autovetter (McCauliff et al. 2015) are dropped, along with limb darkening coefficients. The planet albedo and implied temperature are also dropped in favour of their associated statistics, which better represent the relevant information for planet validation. We further experimented with removing the remaining features in order to create a minimal set, finding that the results in fact marginally improved when we reduced the data table to the 38 features detailed in Table 1, in addition to the SOM features or the local view light curve.
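The rule-based part of the column pruning can be sketched with pandas. We assume here that error columns carry an `_err` suffix and that trapezoid-fit columns share a common prefix; the `trap_` prefix and the toy column list are hypothetical, and the subsequent experiments with removing the remaining features are not reproduced.

```python
import pandas as pd


def drop_unhelpful_columns(df: pd.DataFrame, metadata_cols, drop_prefixes=()) -> pd.DataFrame:
    """Drop metadata columns, error columns (assumed suffix '_err'), and any column
    whose name starts with one of `drop_prefixes` (e.g. a trapezoid-fit prefix)."""
    to_drop = [c for c in df.columns
               if c in metadata_cols
               or c.endswith("_err")
               or any(c.startswith(p) for p in drop_prefixes)]
    return df.drop(columns=to_drop)


# Toy column layout; 'trap_depth' stands in for a hypothetical trapezoid-fit column.
toy = pd.DataFrame(columns=["kepid", "tce_delivname", "tce_period", "tce_period_err",
                            "trap_depth", "tce_depth"])
kept = drop_unhelpful_columns(toy, metadata_cols={"kepid", "tce_delivname"},
                              drop_prefixes=("trap_",))
print(list(kept.columns))
```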

3.4 Data scaling

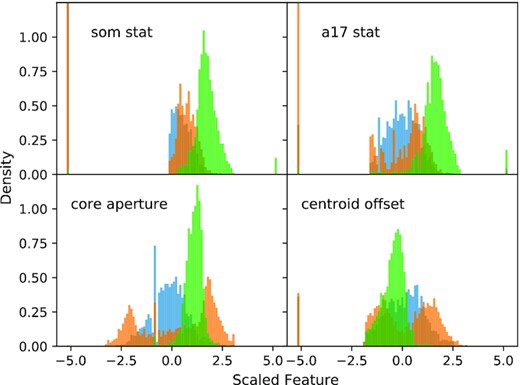

Many machine learning algorithms perform better when the input data are scaled. We therefore standardize each input feature to a mean of zero and a variance of unity. The only exceptions are the ‘local’ view light-curve values, which are already scaled. The four most important feature distributions, as measured by the optimized random forest classifier (RFC) from Section 4, are plotted in Fig. 3 after scaling.

Training set distributions of the most important four features after scaling. Confirmed planets are in green, astrophysical FPs in orange, and non-astrophysical FPs in blue. The single value peaks occur due to large numbers of TCEs having identical values for a feature. The vertical axis cuts off some of the distribution in the top two panels to better show the overall distributions.
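For the feature+LC representation, standardizing the tabular features while leaving the 201 local-view bins untouched might look like the following. It uses scikit-learn's `StandardScaler` inside a `ColumnTransformer`; the paper does not say which implementation was used, and the column layout (feature columns first, then the light-curve bins) is an assumption.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import StandardScaler


def make_scaler(n_features: int, n_lc_bins: int = 201) -> ColumnTransformer:
    """Standardize the first `n_features` columns; pass the local-view bins through."""
    feature_idx = list(range(n_features))
    lc_idx = list(range(n_features, n_features + n_lc_bins))
    return ColumnTransformer([
        ("features", StandardScaler(), feature_idx),   # zero mean, unit variance
        ("local_view", "passthrough", lc_idx),          # already scaled
    ])
```

The scaler should be fitted on the training set only and then applied unchanged to the validation, test and unknown sets.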

3.5 Training set dispositions

Information on the disposition of each TCE is extracted from the DR25 ordinary and supplementary KOI tables (hereafter koi-base and koi-supp, respectively). koi-base is the KOI table derived exclusively from DR25, whereas koi-supp contains a ‘best-knowledge’ disposition for each KOI. We build our confirmed planet training set by taking objects labelled as confirmed in the koi-supp table (column ‘koi_disposition’), which are in the koi-base table and not labelled as FPs or indeterminate in either Santerne et al. (2016) or Burke et al. (2019). This set includes previously validated planets. We remove a small number of apparently confirmed planets where the Kepler data have shown them to be FPs, based on the ‘koi_pdisposition’ column. We use koi-supp to give the most accurate dispositions for individual objects, prioritizing training set label accuracy over uniformly processed dispositions. This leaves 2274 TCEs labelled as confirmed planets.

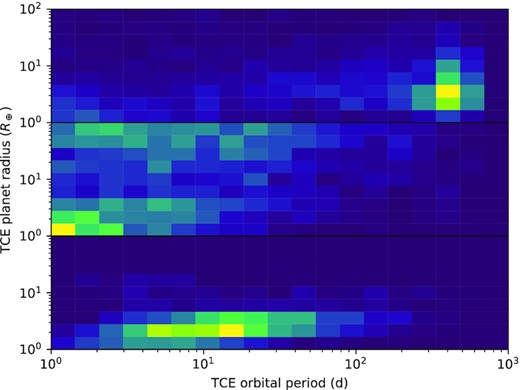

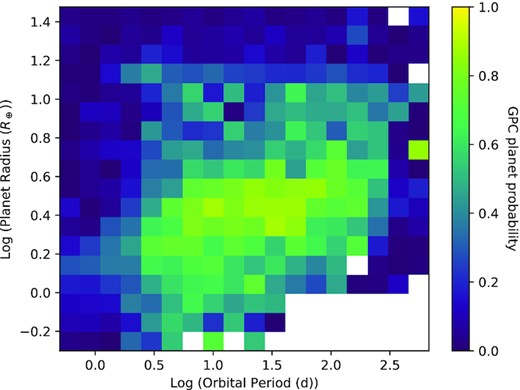

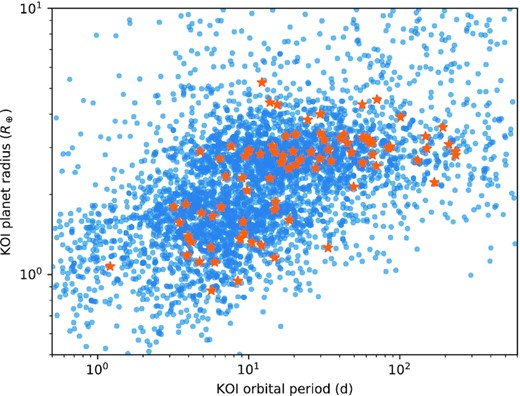

We build two FP sets, one each for astrophysical and non-astrophysical FPs. The astrophysical FP set contains all KOIs labelled FP in the koi-supp table (column ‘koi_pdisposition’) that are in the koi-base table and have no flag raised indicating a non-transiting-like signal, supplemented by all FPs in Santerne et al. (2016). The non-astrophysical FP set contains KOIs where a flag was raised indicating a non-transiting-like signal, supplemented by 2200 randomly drawn TCEs that were not upgraded to KOIs. By using these random TCEs we implicitly assume that the TCEs that were not made KOIs are predominantly FPs, which is borne out by our results (Section 6.2). The astrophysical FP set then has 3100 TCEs, and the non-astrophysical FP set has 2959 TCEs. The planet radius and period distributions of the three sets are shown in Fig. 4.

Training set planet radius and period distributions. Top: non-astrophysical FPs. Middle: astrophysical FPs. Bottom: planets. All distributions are normalized to show probability density. Training set members with apparent planet radii larger than 100 R⊕ are not plotted for clarity (all are FPs).

We combine the two FP sets from here on, leaving an FP set more than twice the size of the confirmed planet set. Some of our models correct for this imbalance implicitly; where they do not, or where the correction is ineffective, the overabundance of FPs ensures that any bias in the models favours FP classifications. We do not include additional TCEs, to avoid unbalancing the training sets further, which can impact model performance.
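The labelling rules of this section can be summarized as boolean selections on the KOI table. The pandas sketch below assumes the koi-supp table is indexed by object identifier and carries the columns ‘koi_disposition’, ‘koi_pdisposition’ and a not-transit-like flag assumed here to be called ‘koi_fpflag_nt’; the literature lists (Santerne et al. 2016; Burke et al. 2019) and the pool of non-KOI TCEs are passed in as plain identifier lists.

```python
import numpy as np
import pandas as pd


def build_label_sets(koi_supp, koi_base_ids, lit_fp_or_indeterminate_ids,
                     santerne_fp_ids, non_koi_tce_ids, n_random=2200, seed=0):
    """Return (planets, astrophysical FPs, non-astrophysical FPs) as pandas Index objects."""
    def select(mask):
        return koi_supp.index[np.asarray(mask, dtype=bool)]

    in_base = koi_supp.index.isin(koi_base_ids)
    not_transit_like = (koi_supp["koi_fpflag_nt"] == 1).to_numpy()
    pdisp_fp = (koi_supp["koi_pdisposition"] == "FALSE POSITIVE").to_numpy()

    # Confirmed in koi-supp, present in koi-base, not FP/indeterminate in the
    # literature lists, and not shown to be an FP by the Kepler data.
    planets = select(
        in_base
        & (koi_supp["koi_disposition"] == "CONFIRMED").to_numpy()
        & ~pdisp_fp
        & ~koi_supp.index.isin(lit_fp_or_indeterminate_ids)
    )
    # FP in koi-supp, present in koi-base, no not-transit-like flag,
    # supplemented by the literature FP list.
    astro_fp = select(in_base & pdisp_fp & ~not_transit_like).union(pd.Index(santerne_fp_ids))
    # Not-transit-like KOIs plus randomly drawn TCEs that never became KOIs.
    rng = np.random.default_rng(seed)
    random_tces = rng.choice(np.asarray(non_koi_tce_ids), size=n_random, replace=False)
    non_astro_fp = select(not_transit_like).union(pd.Index(random_tces))
    return planets, astro_fp, non_astro_fp
```

The combined FP set used from this point is then `astro_fp.union(non_astro_fp)`.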

We split our data into a training set (80 per cent, 6663 TCEs), a validation set for model selection and optimization (10 per cent, 834 TCEs), and a test set for final analysis of model performance (10 per cent, 836 TCEs), in each case maintaining the proportions of planets to FPs. TCEs with no disposition form the ‘unknown’ set (24 201 TCEs).
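A stratified 80/10/10 split of this kind can be sketched with two calls to scikit-learn's `train_test_split`, stratifying on the planet/FP label each time. The random seed is arbitrary, and because of rounding the resulting counts need not match the quoted set sizes exactly.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def split_80_10_10(X, y, seed=0):
    """Stratified train/validation/test split preserving the planet-to-FP proportion."""
    X_train, X_rest, y_train, y_rest = train_test_split(
        X, y, test_size=0.2, stratify=y, random_state=seed)
    # Divide the held-out 20 per cent evenly into validation and test sets.
    X_val, X_test, y_val, y_test = train_test_split(
        X_rest, y_rest, test_size=0.5, stratify=y_rest, random_state=seed)
    return (X_train, y_train), (X_val, y_val), (X_test, y_test)
```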

3.6 Training set scenario distributions

The algorithms we are building fundamentally aim to derive the probability that a given input is a member of one of the given training sets. As such, the membership, information content, and distributions of the training sets are crucially important. The overall proportion of FPs relative to planets is deliberately left to be incorporated as prior information. We could attempt to include it by changing the relative numbers of objects in the confirmed planet and FP data sets, but the number of objects in a training set is not trivially related to the output probability for most machine learning algorithms.

Another consideration is the relative distributions of object parameters within each of the planet and FP data sets. This is where the effect of, for example, planet radius on the likelihood of a given TCE being an FP will appear. By taking the confirmed and FP classifications of the koi-supp table as our input, we implicitly build any biases present in that table into our algorithm. We note that the table distribution partly reflects the real distribution of planets and FPs detected by the Kepler satellite and by the detection algorithms that created the TCE and KOI lists. Incorporating that distribution is desirable, given that we are studying candidates found using the same process; in that sense the Kepler set of planets and FPs is the ideal distribution to use.

The distribution of Kepler detected planets and FPs we use will, however, be biased by the methods used to label KOIs as planets and FPs. In particular, the majority of confirmed planets and many FPs labelled in the KOI list have been validated by the vespa algorithm (∼50 per cent of the known KOI planets), and as such biases in that algorithm may be present in our results. We compare our results to the vespa designations in Section 6.5, showing that they disagree in many cases despite this reliance on vespa designations. The reliance on past classification of objects as planet or FP is a weakness of our method that we aim to improve in future work, using simulated candidates from each scenario.

A further point is the balance of astrophysical to non-astrophysical FPs in the training set. We can estimate what this should be from the ratio of KOIs to TCEs, where KOIs make up ∼30 per cent of the TCE list, under the assumption that the majority of non-KOI TCEs are non-astrophysical FPs. We use a roughly 50:50 ratio in our training set, which effectively increases the weighting of the astrophysical FPs. This improves the representation of astrophysical FPs, which is desirable because non-astrophysical FPs are easy to distinguish at a sufficiently high signal-to-noise ratio. Before validating any candidate, we impose a multiple event statistic (MES) cut of 10.5, as recommended by Burke et al. (2019), to remove the possibility of low signal-to-noise ratio instrumental FPs complicating our results.

4 MODEL SELECTION AND OPTIMIZATION

Trialled model parameters. All combinations listed were tested. Best parameters as found on the validation set are in bold.

| Model/parameter | Options |
|---|---|
| GPC | |
| n_inducing_points | [16,32,64,128] |
| likelihood | [bernoulli] |
| kernel | [rbf,linear,matern32,matern52,polynomial (order 2 and 3)] |
| ARD weights | [True, False] |
| RFC | |
| n_estimators | [300,500,1000,2000] |
| max_features | [5,6,7] |
| min_samples_split | [2,3,4,5] |
| max_depth | [None,5,10,20] |
| class_weight | [balanced] |
| Extra trees | |
| n_estimators | [300,500,1000,2000] |
| max_features | [5,6,7] |
| min_samples_split | [2,3,4,5] |
| max_depth | [None,5,10,20] |
| class_weight | [balanced] |
| Multilayer perceptron | |
| solver | [adam,sgd] |
| alpha | [1,1e-1,1e-2,1e-3,1e-4,1e-5] |
| hidden_layer_sizes | [(10,),(15,),(20,),(5,5),(5,10)] |
| learning_rate | [constant,invscaling,adaptive] |
| early_stopping | [True,False] |
| max_iter | [2000] |
| Decision tree | |
| max_depth | [10,20,30] |
| class_weight | [balanced] |
| Logistic | |
| penalty | [l2] |
| class_weight | [balanced] |
| QDA | |
| priors | [None] |
| K-NN | |
| n_neighbours | [3,5,7,9] |
| metric | [minkowski,euclidean,manhattan] |
| weights | [uniform,distance] |
| LDA | |
| priors | [None] |

Best model performance on test set, ranked by calibrated log-loss. The GPC does not require external calibration.

| Model | AUC | Precision | Recall | Log-loss | Calibrated log-loss |
|---|---|---|---|---|---|
| Gaussian process classifier | 0.999 | 0.984 | 0.995 | 0.54 | – |
| Random forest | 0.999 | 0.981 | 0.997 | 0.58 | 0.54 |
| Extra trees | 0.999 | 0.985 | 0.992 | 0.58 | 0.58 |
| Multilayer perceptron | 0.997 | 0.982 | 0.985 | 0.83 | 0.66 |
| K-nearest neighbours | 0.997 | 0.995 | 0.972 | 0.83 | 0.66 |
| Decision tree | 0.958 | 0.979 | 0.984 | 0.95 | 0.74 |
| Logistic regression | 0.997 | 0.988 | 0.967 | 1.12 | 1.03 |
| Quadratic discriminant analysis | 0.989 | 0.983 | 0.970 | 1.16 | 1.20 |
| Linear discriminant analysis | 0.993 | 0.982 | 0.965 | 1.32 | 1.36 |

The models we adopt are described in Section 2; readers interested in the other models are referred to the scikit-learn documentation and references therein (Pedregosa et al. 2011).

We found that while most tested models were highly successful, the best performance after external calibration was shown by an RFC. It is interesting to see the relative success of even very simple models such as linear discriminant analysis (LDA), implying that the underlying decision space is not overly complex. The overall success of the models is not unexpected, as we are providing the classifiers with much the same information as was often used to classify candidates as planets or FPs in the first place, and, in the case of vespa validated candidates, adding more detailed light-curve information. We proceed with the RFC as a versatile, robust algorithm, supplementing its results with classifications from the next two most successful models, extra trees (ET) and the multilayer perceptron (MLP), to guard against overfitting by any one model.
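The exhaustive search over the RFC rows of the trialled-parameter table can be sketched with scikit-learn's `ParameterGrid`. Scoring each combination by uncalibrated log-loss on the validation set is an assumption made for illustration; the text states only that the best parameters were found on the validation set.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import log_loss
from sklearn.model_selection import ParameterGrid

# RFC options from the trialled-parameter table (class_weight fixed to 'balanced').
RFC_GRID = {
    "n_estimators": [300, 500, 1000, 2000],
    "max_features": [5, 6, 7],
    "min_samples_split": [2, 3, 4, 5],
    "max_depth": [None, 5, 10, 20],
}


def select_rfc(X_train, y_train, X_val, y_val, grid=RFC_GRID, seed=0):
    """Try every combination in `grid`; keep the one with the lowest validation log-loss."""
    best_params, best_loss = None, float("inf")
    for params in ParameterGrid(grid):
        model = RandomForestClassifier(class_weight="balanced", random_state=seed, **params)
        model.fit(X_train, y_train)
        loss = log_loss(y_val, model.predict_proba(X_val), labels=model.classes_)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss
```

The full grid has 192 combinations, several with 2000 trees, so in practice the loop would be parallelized (for example with `n_jobs` on the forest).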

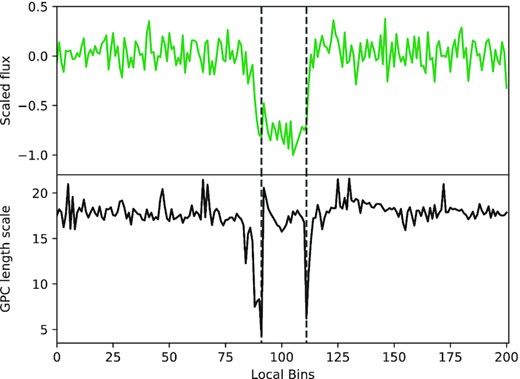

For the feature+LC input data, we use a GPC to provide an independent and naturally probabilistic method for comparison, and to guard against overconfidence in model classifications. We implement the GPC using gpflow. The GPC is optimized by varying the kernel function, and its final performance is shown in Table 3. We also trial the GPC on variations of the input data: the feature+SOM data, the light curve plus a subset of features (features+LC-light), and the full feature+LC data set. The results are not strongly dependent on the input data set, so we use the feature+LC data set, which differentiates the GPC from the other models. Fig. 5 shows the GPC adapting to the input transit data. The underlying theory of a GPC was summarized in Section 2.2.

Top: local view of a planet light curve. Bottom: GPC automatic relevance determination (ARD) length scales for each of the input bins in the local view. Low length scales can be seen at ingress and egress, demonstrating that the GPC has learned to prioritize those regions of the light curve when making classifications.
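The idea behind Fig. 5, that automatic relevance determination gives each input its own length scale and that informative inputs end up with short ones, can be demonstrated on toy data. This sketch deliberately swaps in scikit-learn's `GaussianProcessClassifier` (a Laplace approximation with no inducing points) for the sparse gpflow model used in the paper, purely to show the ARD behaviour; it is not the paper's implementation.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessClassifier
from sklearn.gaussian_process.kernels import RBF, ConstantKernel


def fit_ard_gpc(X, y, seed=0):
    """Fit a GPC whose RBF kernel has one length scale per input column (ARD)."""
    kernel = ConstantKernel(1.0) * RBF(length_scale=np.ones(X.shape[1]))
    gpc = GaussianProcessClassifier(kernel=kernel, random_state=seed).fit(X, y)
    # For binary problems kernel_ is the optimized kernel; k2 is its RBF factor.
    return gpc, gpc.kernel_.k2.length_scale
```

On data where only the first column determines the class, the fitted length scale for that column comes out shorter than those of the irrelevant columns, mirroring the short length scales at ingress and egress in Fig. 5.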

5 PLANET VALIDATION

5.1 Probability calibration

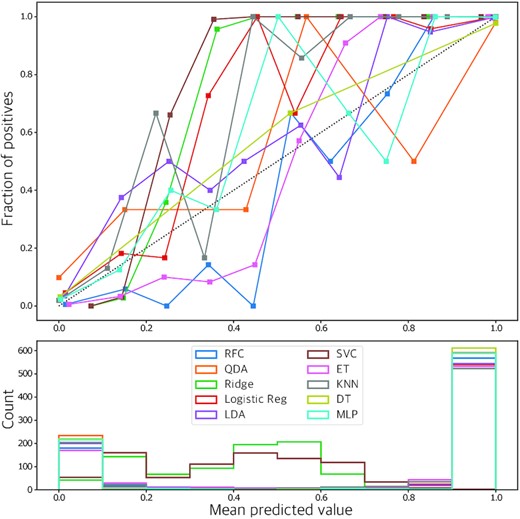

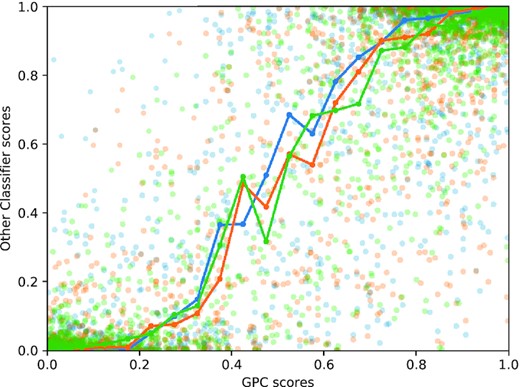

Although the GPC naturally produces probabilities as output, $p(s=1 \mid \boldsymbol{x}^{*})$, the other classifiers are inherently non-probabilistic models and need their ad hoc probabilities to be calibrated (Zadrozny & Elkan 2001, 2002; Niculescu-Mizil & Caruana 2005). Classifier probability calibration is typically assessed by plotting the ‘calibration curve’, the fraction of class members as a function of classifier output. The uncalibrated curve is shown in Fig. 6, which highlights a counterintuitive issue: the better a classifier performs, the harder it can be to calibrate, because few objects are assigned intermediate values. Given that our aim is to validate planets, we focus on accurate and precise calibration at the extreme ends, where $p(s=1 \mid \boldsymbol{x}^{*}) < 0.01$ or $p(s=1 \mid \boldsymbol{x}^{*}) > 0.99$.

Top: calibration curve for the uncalibrated non-GP classifiers. The black dashed line represents perfect calibration. Bottom: histogram of classifications showing the number of candidates falling in each bin for each classifier.
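A calibration curve and the accompanying histogram, as in Figs 6 and 7, can be produced with scikit-learn's `calibration_curve`. The choice of ten uniform bins is an assumption. Note that `calibration_curve` omits empty bins, whereas the histogram counts cover all bins.

```python
import numpy as np
from sklearn.calibration import calibration_curve


def reliability(y_true, scores, n_bins=10):
    """Mean score and fraction of true class members per non-empty bin, plus bin counts."""
    frac_pos, mean_score = calibration_curve(y_true, scores, n_bins=n_bins,
                                             strategy="uniform")
    counts, _ = np.histogram(scores, bins=n_bins, range=(0.0, 1.0))
    return mean_score, frac_pos, counts
```

For a perfectly calibrated classifier, `frac_pos` tracks `mean_score` along the dashed diagonal. A classifier that pushes almost everything to 0 or 1 leaves the middle bins nearly empty, which is the difficulty described above.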

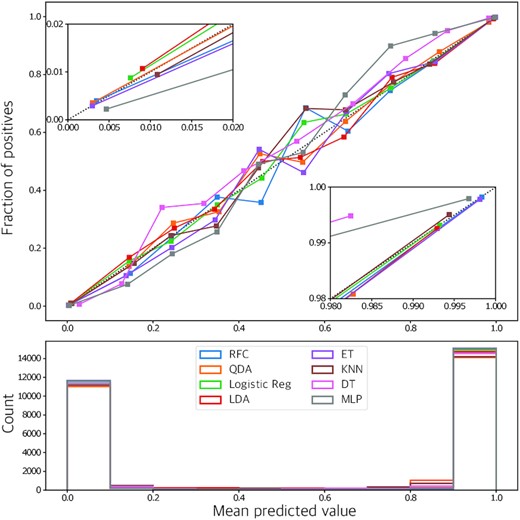

Top: calibration curve for the calibrated non-GP classifiers. The black dashed line represents perfect calibration. The two insets show zoomed plots of the low and high ends of the curve. Bottom: histogram of classifications showing the number of candidates falling in each bin for each classifier. Synthetic training set members are included in this plot.

To statistically validate a candidate as a planet, the commonly accepted threshold is $p(s=1 \mid \boldsymbol{x}^{*}) > 0.99$ (Morton et al. 2016). Measuring probabilities to this level requires our calibration to be precise to 1 per cent or better. We use the isotonic regression calibration technique (Zadrozny & Elkan 2001, 2002), which calibrates by counting samples in bins of given classifier scores. To measure the fraction of true planets in the $p(s=1 \mid \boldsymbol{x}^{*}) > 0.99$ bin, we therefore require at least $N = 10\,000$ test planets to reduce the Poisson counting error, $\sqrt{N}/N$, to 1 per cent. Given the size of our training set, additional test inputs are required for calibration.
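The required sample size follows directly from Poisson statistics. A bin containing $N$ objects has a fractional counting uncertainty of $1/\sqrt{N}$, so reaching 1 per cent precision needs:

```latex
\sigma_{\rm rel} = \frac{\sqrt{N}}{N} = \frac{1}{\sqrt{N}} \le 0.01
\quad\Longrightarrow\quad
N \ge \left(\frac{1}{0.01}\right)^{2} = 10^{4}.
```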

To allow calibration at this precision, we synthesize additional examples of planets and FPs from our training set by interpolating between members of each class. The process is only performed for the feature+SOM data set, as the GPC does not need calibration. We select a training set member at random, and then select another member of the same class that is within the 20th percentile of all the member-to-member distances within that class. Distances are Euclidean distances between two class members, computed over all columns. Restricting the distances in this way allows for non-trivial class boundaries in the parameter space. A new synthetic class member is then produced by interpolating between the two selected real inputs. We generate 10 000 each of planets and FPs from the training set. These synthetic data sets are used only for calibration, not to train the classifiers. The calibrated classifier curves are shown in Fig. 7.
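The interpolation scheme can be sketched as follows for a single class. Two details are assumptions not fixed by the text: the interpolation weight is drawn uniformly between 0 and 1, and the first member is drawn only from members that have at least one partner inside the distance threshold.

```python
import numpy as np
from scipy.spatial.distance import pdist, squareform


def synthesize_class(X, n_new, percentile=20.0, seed=0):
    """Synthesize members of one class by interpolating between nearby real members."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    condensed = pdist(X)                         # all member-to-member Euclidean distances
    threshold = np.percentile(condensed, percentile)
    dist = squareform(condensed)
    # Pairs closer than the threshold, excluding each member paired with itself.
    close = (dist <= threshold) & ~np.eye(len(X), dtype=bool)
    anchors = np.flatnonzero(close.any(axis=1))  # members with at least one close partner
    out = np.empty((n_new, X.shape[1]))
    for k in range(n_new):
        i = rng.choice(anchors)
        j = rng.choice(np.flatnonzero(close[i]))
        w = rng.uniform()                        # assumed: uniform interpolation weight
        out[k] = X[i] + w * (X[j] - X[i])
    return out
```

Each synthetic point lies on a segment between two real members, which is why, as noted below, interpolation weakens the influence of outliers.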

It is important to note that by interpolating, we have essentially weakened the effect of outliers in the training data, at least for the calibration step. For this and other reasons, candidates that are outliers to our training set will not get valid classifications, and should be ignored. We describe our process for flagging outliers in Section 5.5. Interpolation also means that while we can attain the desired precision, the accuracy of the calibration may still be subject to systematic biases in the training set, which were discussed in Section 3.6.

5.2 Classifier training

The GPC was trained using the training data with no calibration. We used the gpflow (Matthews et al. 2017) python extension to tensorflow (Abadi et al. 2016), running on an Nvidia GeForce GTX Titan XP GPU. On this architecture the GPC takes less than 1 min to train, and seconds to classify new candidates.

For the other classifiers, training and calibration were performed on a 2017 generation iMac with four 4.2 GHz Intel i7 processors. Training each model takes a few minutes, with classification of new objects possible in seconds. To create calibrated versions of the other classifiers, we employ a cross-validation strategy so that the training data can be used for both training and calibration. The training set and synthetic data set are each split into 10 folds, and on each iteration a classifier is trained on 90 per cent of the training data, then calibrated on the remaining 10 per cent of the training data plus 10 per cent of the synthetic data. The process is repeated for each fold to create 10 separate classifiers, whose results are averaged to produce the final classifications.
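The fold-wise train-then-calibrate procedure might be sketched as below with scikit-learn's `RandomForestClassifier` and `IsotonicRegression`. Pairing the real and synthetic folds by position and the stratified shuffling are assumptions; any RFC hyperparameters are passed through `rf_kwargs`.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.isotonic import IsotonicRegression
from sklearn.model_selection import StratifiedKFold


def train_calibrated_ensemble(X, y, X_syn, y_syn, n_folds=10, seed=0, **rf_kwargs):
    """Train one classifier per fold; calibrate it on held-out real plus synthetic data."""
    real_folds = StratifiedKFold(n_splits=n_folds, shuffle=True, random_state=seed).split(X, y)
    syn_folds = StratifiedKFold(n_splits=n_folds, shuffle=True, random_state=seed).split(X_syn, y_syn)
    members = []
    for (train_idx, cal_idx), (_, syn_idx) in zip(real_folds, syn_folds):
        clf = RandomForestClassifier(class_weight="balanced", random_state=seed, **rf_kwargs)
        clf.fit(X[train_idx], y[train_idx])
        # Calibration set: the held-out real fold plus one fold of synthetic data.
        X_cal = np.vstack([X[cal_idx], X_syn[syn_idx]])
        y_cal = np.concatenate([y[cal_idx], y_syn[syn_idx]])
        iso = IsotonicRegression(y_min=0.0, y_max=1.0, out_of_bounds="clip")
        iso.fit(clf.predict_proba(X_cal)[:, 1], y_cal)
        members.append((clf, iso))
    return members


def predict_calibrated(members, X_new):
    """Average the calibrated planet probabilities over the fold classifiers."""
    return np.mean([iso.predict(clf.predict_proba(X_new)[:, 1]) for clf, iso in members],
                   axis=0)
```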

The above steps suffice to give results on the validation, test, and unknown data sets. We also aim to classify the training data set independently, as a sanity check and to confirm previous validations. To get results for the training data set we introduce a further layer of cross-validation, with 20 folds. For the GPC this is the only cross-validation: the GPC is trained on 95 per cent of the training data to give a result for the remaining 5 per cent, and the process is repeated to classify the whole training set. For the other classifiers we set aside 5 per cent of the training data, perform the above training and calibration steps using the remaining 95 per cent, and repeat.
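The outer 20-fold loop that classifies every training-set member with a model that never saw it can be written generically. The `fit_predict` callable is a hypothetical interface: for the GPC it would train and predict directly, and for the other classifiers it would wrap the 10-fold training and calibration step described above.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold


def out_of_fold_predictions(X, y, fit_predict, n_folds=20, seed=0):
    """Classify each training member using a model trained on the other folds.

    `fit_predict(X_fit, y_fit, X_apply)` trains on its first two arguments and
    returns planet probabilities for the third.
    """
    preds = np.empty(len(X))
    folds = StratifiedKFold(n_splits=n_folds, shuffle=True, random_state=seed)
    for keep_idx, held_out_idx in folds.split(X, y):
        preds[held_out_idx] = fit_predict(X[keep_idx], y[keep_idx], X[held_out_idx])
    return preds
```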

5.3 Positional probabilities

Part of the prior probability for an object to be a planet or FP, P(s|I), is the probability that the signal arises from the target star, a known blended star in the aperture, or an unresolved background star. We derive these values using the positional probabilities calculated in Bryson & Morton (2017), which provide the probability that the signal arises from the target star, $P_{\rm target}$, the probability that it arises from a known secondary source, $P_{\rm secondsource}$, typically another KIC target or a star in the Kepler United Kingdom InfraRed Telescope (UKIRT) survey,1 and the probability that it arises from an unresolved background star, $P_{\rm background}$. Bryson & Morton (2017) also considered a small number of sources detected through high-resolution imaging; we ignore these and instead take the most up-to-date results from the robo-AO survey of Ziegler et al. (2018). The positional probabilities have an associated score representing the quality of the determination; where this score is below the accepted threshold of 0.3, we continue with the a priori values given by Bryson & Morton (2017) but do not validate the planet.

The calculation in Bryson & Morton (2017) was performed without Gaia DR2 (Gaia Collaboration et al. 2018) information, and so we update the positional probabilities using the new available information. We first search the Gaia DR2 data base for any detected sources within 25 arcsec of each TCE host star. We chose 25 arcsec as this is the limit considered for contaminating background sources in Bryson & Morton (2017). Gaia sources that are in the KIC (identified in Berger et al. 2018), in the Kepler UKIRT survey, or in the new robo-AO companion source list are discarded as these were either accounted for in Bryson & Morton (2017) or are considered separately in the case of robo-AO.

We then check for each TCE whether any new Gaia or robo-AO sources are bright enough to cause the observed signal, conservatively assuming a total eclipse of the blended background source. If there are such sources, we flag the TCE in our results and adjust the probability of a background source causing the signal, $P_{\rm background}$, to account for the extra source, by increasing the local density of unresolved background stars appropriately and normalizing the set of positional probabilities given the new value of $P_{\rm background}$. It would be ideal to treat the Gaia source as a known second source, but without access to the centroid ellipses for each candidate we cannot make that calculation. We do not validate TCEs with a flag raised for a detected Gaia or robo-AO companion, although we still provide results in Section 6.
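The brightness test and the renormalization can be sketched as follows. Two assumptions apply. First, the aperture is taken to contain only the target and the new source when converting a magnitude difference into the maximum depth a totally eclipsed source could produce. Second, the factor by which the unresolved background density is raised (`density_factor`, hypothetical) is treated as an input, because the text does not spell out how it is computed.

```python
import numpy as np


def can_cause_signal(delta_mag, depth):
    """True if a source `delta_mag` fainter than the target could produce `depth`."""
    flux_ratio = 10.0 ** (-0.4 * np.asarray(delta_mag, dtype=float))  # F_source / F_target
    max_depth = flux_ratio / (1.0 + flux_ratio)   # depth if the source is totally eclipsed
    return max_depth >= depth


def update_positional(p_target, p_second, p_background, density_factor):
    """Scale P_background by `density_factor`, then renormalize all three to sum to one."""
    p = np.array([p_target, p_second, p_background * density_factor], dtype=float)
    return p / p.sum()
```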

5.4 Prior probabilities

5.4.1 P(FP-EB)

To calculate P(FP-EB) we need the probability that a randomly chosen star is an eclipsing binary with an orbital period P that Kepler could detect. We calculate the product $f_{\rm close\text{-}binary}\,f_{\rm eclipse}$ using the results of Moe & Di Stefano (2017). We integrate their occurrence rate for companion stars to main-sequence solar-like hosts as a function of log P (their equation 23), multiplied by the eclipse probability at that period for a solar host star. We consider companions with log P < 2.5 (P < 320 d) and mass ratio q > 0.1, correcting from the q > 0.3 equation using a factor of 1.3 as suggested. The integration gives $f_{\rm close\text{-}binary}\,f_{\rm eclipse} = 0.0048$, which is strikingly lower than the planet prior, primarily because of the much lower occurrence rate of close binaries. Ignoring the eclipse probability, we find the frequency of solar-like stars with companions within 320 d to be 0.055 from Moe & Di Stefano (2017). It is often stated that ∼50 per cent of stars are in multiple systems, but this fraction is dominated by wide companions with orbital periods longer than 320 d. This calculation implicitly assumes that any eclipsing binary in this period range with a mass ratio greater than 0.1 would produce a detectable eclipse in the Kepler data.
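The structure of this integral can be sketched numerically. The Moe & Di Stefano (2017) occurrence function is not reproduced here. It is passed in as a hypothetical callable `occurrence_per_logp`, and the lower integration limit is likewise a free parameter. The eclipse probability is approximated geometrically as $R_\odot/a$ for a circular orbit with a total mass of one solar mass, which is an assumption. The sketch therefore shows the method and does not reproduce the quoted value of 0.0048.

```python
import numpy as np

G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30    # kg
R_SUN = 6.957e8     # m
DAY = 86400.0       # s


def eclipse_probability(period_days, m_total=M_SUN, radius=R_SUN):
    """Geometric eclipse probability R/a for a circular orbit (Kepler's third law)."""
    p = np.asarray(period_days, dtype=float) * DAY
    a = (G * m_total * p ** 2 / (4.0 * np.pi ** 2)) ** (1.0 / 3.0)
    return radius / a


def eclipsing_fraction(occurrence_per_logp, logp_min, logp_max=2.5,
                       q_correction=1.3, n=2000):
    """q_correction * integral of occurrence(log P) * eclipse probability d(log P)."""
    logp = np.linspace(logp_min, logp_max, n)
    integrand = occurrence_per_logp(logp) * eclipse_probability(10.0 ** logp)
    # Trapezoid rule over the log-period grid.
    integral = np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(logp))
    return q_correction * integral
```

As a check, `eclipse_probability(365.25)` is about 0.00465, the familiar $R_\odot/1\,{\rm au}$.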

5.4.2 P(FP-HEB)

The probability that a star is a hierarchical eclipsing binary depends on the triple star fraction. In our context, the product $f_{\rm close\text{-}triple}\,f_{\rm eclipse}$ is the probability for a star to be in a triple system where the close binary component is in the background, has an orbital period short enough for Kepler to detect, and eclipses. The statistics for triple systems of this type (A-(Ba,Bb)) are extremely poor (Moe & Di Stefano 2017), owing to the difficulty of reliably detecting additional companions to already lower mass companion stars. If we assume that one of the B components is near solar mass, then we can use the general close companion frequency, which is the same as $f_{\rm close\text{-}binary}\,f_{\rm eclipse}$, multiplied by an additional factor to account for the wider companion. We use the fraction of stars with any companion from Moe & Di Stefano (2017), $f_{\rm multiple} = 0.48$. As such, we take $f_{\rm close\text{-}triple}\,f_{\rm eclipse} = f_{\rm multiple}\,f_{\rm close\text{-}binary}\,f_{\rm eclipse}$. Again, this calculation implicitly assumes that any such triple with mass ratios greater than 0.1 to the primary star would produce a detectable eclipse in the Kepler data.
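Substituting the two values quoted in this section gives the numerical size of the hierarchical term:

```latex
f_{\rm close\text{-}triple}\, f_{\rm eclipse}
  = f_{\rm multiple}\, f_{\rm close\text{-}binary}\, f_{\rm eclipse}
  = 0.48 \times 0.0048
  \approx 2.3 \times 10^{-3}.
```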

5.4.3 P(FP-HTP)

Unlike the stellar multiple cases, it is unlikely that all background transiting planets would produce a detectable signal in the Kepler data. Estimating the fraction that do is complex and would require an estimate of the transit depth distribution for the full set of background transiting planets. Instead, we assume that all such planets would produce a detectable signal if in a binary system, but not in systems of higher order multiplicity. We take $f_{\rm binary} = 0.27$ from Moe & Di Stefano (2017) for solar-like primary components, which is largely informed by Raghavan et al. (2010), and $f_{\rm planet}\,f_{\rm transit} = 0.0308$ as calculated above. Note that we do not include any effect of multiplicity on the planet occurrence rate.
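Multiplying the two factors quoted above gives the scale of the multiplicity-and-transit part of this scenario. How this product combines with the positional terms follows the prior definitions given earlier in the paper.

```latex
f_{\rm binary}\, f_{\rm planet}\, f_{\rm transit}
  = 0.27 \times 0.0308
  \approx 8.3 \times 10^{-3}.
```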

All necessary components for the remaining priors have now been discussed, although we again note the implicit assumption that all scenarios could produce a detectable transit.

5.4.4 P(FPnon-astro)

P(FPnon-astro) is difficult to calculate, so we follow Morton et al. (2016) in setting it to $5\times10^{-5}$. Recent work has suggested that the systematic false alarm rate is highly important when considering long-period small planetary candidates (Burke et al. 2019) and can be the most likely source of FPs for such candidates. The low prior rate for non-astrophysical FPs used here is justified because we apply a cut on the MES of 10.5, as recommended by Burke et al. (2019), allowing only significant candidates to be validated. At such an MES, the ratio of the systematic to planet prior is less than $10^{-3}$ (Burke et al. 2019, their fig. 3), which translates to a prior of order $10^{-5}$ when applied to our planet scenario prior.
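A rough check of that order of magnitude, assuming the planet scenario prior is approximated by $f_{\rm planet}\,f_{\rm transit} = 0.0308$ (including $P_{\rm target} \le 1$ could only lower it):

```latex
P({\rm FP}_{\rm non\text{-}astro})
  \lesssim 10^{-3} \times f_{\rm planet}\, f_{\rm transit}
  = 10^{-3} \times 0.0308
  \approx 3 \times 10^{-5},
```

which is of the same order as the adopted $5\times10^{-5}$.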

5.4.5 Prior information in the training set

Note that the probability of the signal arising from the target star is included in our scenario prior as $P_{\rm target}$. As some centroid information is included in the training data, the classifiers may incorporate the probability of the signal arising from the target star internally, so we are at risk of double counting this information in our posterior probabilities. We include positional probabilities in P(s|I) because the probabilities available from Bryson & Morton (2017) include information on nearby stars and their compatibility with the centroid ellipses derived for each TCE. This is more information than we can easily make available to the classifiers, and including it also improves interpretability by exposing the positional probabilities directly in the calculation. Removing centroid information from the classifiers would artificially reduce their performance. Including prior information on the target in both the classifiers and the external prior is the conservative approach, because a significant centroid offset, or a low target star positional probability, can only reduce the derived probability of a TCE being a planet.

5.5 Outlier testing

Our method is only valid for ‘inliers’, candidates that are well represented by the training set and that are not rare or unusual. We perform two tests to flag outlier TCEs, using different methodologies for independence.

The first considers outliers from the entire set of TCEs, to avoid mistakenly validating a candidate that is a unique case and hence might be misinterpreted. We implement the local outlier factor method (Breunig et al. 2000), which measures the local density of an entry in the data set with respect to its neighbours. The result is a factor that decreases as the local density drops. If that factor is particularly low, the entry is flagged as an outlier. We use a default threshold of −1.5 that labels 391 (1.2 per cent) of TCEs as outliers, 108 of which were KOIs. The local outlier factor is well suited to studying the whole data set as it is an unsupervised method that requires no separate training set.
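The local outlier factor test can be sketched with scikit-learn's `LocalOutlierFactor`. With `contamination="auto"`, scikit-learn sets the decision offset to −1.5, so points are flagged exactly when their negative outlier factor falls below −1.5, matching the default threshold quoted above. The neighbour count of 20 is scikit-learn's default and an assumption here.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor


def lof_outliers(X, n_neighbors=20):
    """Flag outliers across the whole TCE set with the local outlier factor."""
    lof = LocalOutlierFactor(n_neighbors=n_neighbors, contamination="auto")
    labels = lof.fit_predict(X)          # -1 marks outliers
    # With contamination='auto' the offset is -1.5, so label == -1 is
    # equivalent to negative_outlier_factor_ < -1.5.
    return labels == -1, lof.negative_outlier_factor_
```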

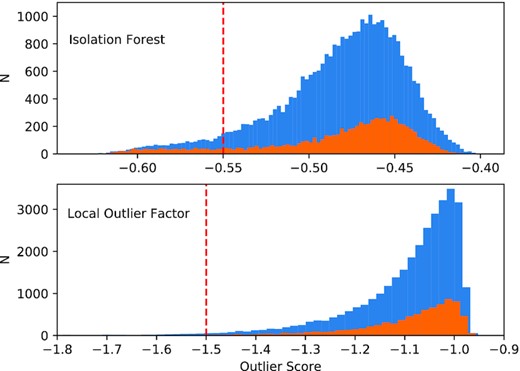

The second outlier detection method aims to find objects that are not well represented in the training set specifically. In this case we implement an isolation forest (Liu, Ting & Zhou 2008) with 500 trees, which is trained on the training set and then applied to the remaining data. Fig. 8 shows the distribution of scores produced by the isolation forest, where lower scores indicate outliers. The scores of most candidates follow an approximately normal distribution, with a tail of more outlying candidates. We set our threshold at −0.55 based on this distribution, which flags 1979 (6.1 per cent) of TCEs as outliers, 932 of which were KOIs.

Figure 8. Top: isolation forest outlier scores for all TCEs (blue) and KOIs (orange). The red dashed line marks the threshold for outlier flagging. Bottom: as top, for the local outlier factor. In both cases, outliers have more negative scores.
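
A compact isolation forest, fitted on training data only and then used to score other objects, can be sketched as follows. It follows the construction of Liu, Ting & Zhou (2008) with 500 trees as in the text; the subsample size of 256, the random seed and the sign convention (scores in [−1, 0], lower is more outlying, matching scikit-learn's `IsolationForest.score_samples`) are assumptions made so that the −0.55 threshold has a concrete meaning.

```python
import math
import random

EULER_GAMMA = 0.5772156649015329


def c(n):
    """Average path length of an unsuccessful binary-search-tree lookup on n
    points, used to normalise isolation depths."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(data, depth, max_depth, rng):
    # Stop at the depth limit or when the node cannot be split further
    if depth >= max_depth or len(data) <= 1:
        return ("leaf", len(data))
    dim = rng.randrange(len(data[0]))
    lo = min(p[dim] for p in data)
    hi = max(p[dim] for p in data)
    if lo == hi:
        return ("leaf", len(data))
    split = rng.uniform(lo, hi)  # random split between the min and max
    left = [p for p in data if p[dim] < split]
    right = [p for p in data if p[dim] >= split]
    return ("node", dim, split,
            build_tree(left, depth + 1, max_depth, rng),
            build_tree(right, depth + 1, max_depth, rng))


def path_length(x, node, depth=0):
    if node[0] == "leaf":
        # add the expected remaining depth for the points left in the leaf
        return depth + c(node[1])
    _, dim, split, left, right = node
    return path_length(x, left if x[dim] < split else right, depth + 1)


def fit_isolation_forest(train, n_trees=500, sample_size=256, seed=0):
    """Fit on the training set only; assumes at least two training points."""
    rng = random.Random(seed)
    psi = min(sample_size, len(train))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(train, psi), 0, max_depth, rng)
             for _ in range(n_trees)]
    return trees, psi


def score_samples(points, forest):
    """Negated anomaly score in [-1, 0]; lower means more outlying."""
    trees, psi = forest
    scores = []
    for x in points:
        mean_depth = sum(path_length(x, t) for t in trees) / len(trees)
        scores.append(-(2.0 ** (-mean_depth / c(psi))))
    return scores


# Toy usage: a Gaussian training cloud, then score one core and one far object
rng = random.Random(1)
train = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(200)]
forest = fit_isolation_forest(train)
scores = score_samples([(0.0, 0.0), (8.0, 8.0)], forest)
flags = [s < -0.55 for s in scores]
```

Objects outside the range of the training data are isolated within a few random splits and score well below the −0.55 threshold, while objects in the dense core need many splits and score well above it. The `c(n)` normalisation keeps scores comparable between forests built on different subsample sizes.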

5.6 External flags

Some information is only available for a small fraction of the sample, and is hence hard to include directly in the models. In these cases, we create external flags alongside our model scores, and conservatively withhold validation from any candidate for which a warning flag is raised.

As described in Section 5.3, we flag TCEs where either Gaia DR2 or robo-AO has detected a previously unresolved companion in the aperture that is bright enough to cause the observed TCE. The robo-AO flag supersedes the Gaia flag: if a source is detected by robo-AO, we do not also raise the Gaia flag for that source. We also flag TCEs whose host star was shown to be evolved by Berger et al. (2018) using Gaia DR2 data, and include the Berger et al. (2018) binarity flag, which indicates evidence for a binary companion from either the Gaia parallax or other high-resolution imaging.
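
The precedence rule between the two companion flags amounts to a set difference. In this sketch the inputs are hypothetical sets of companion identifiers, assumed to be already cross-matched between Gaia DR2 and robo-AO and already filtered to sources bright enough to cause the signal; the brightness test itself (Section 5.3) is not reproduced.

```python
def companion_flags(gaia_sources, roboao_sources):
    """Return (gaia_flag, roboao_flag) for one TCE.

    Both arguments are hypothetical sets of cross-matched companion IDs,
    already limited to sources bright enough to cause the signal.
    """
    roboao_flag = len(roboao_sources) > 0
    # A source already detected by robo-AO does not also raise the Gaia flag
    gaia_only = set(gaia_sources) - set(roboao_sources)
    gaia_flag = len(gaia_only) > 0
    return gaia_flag, roboao_flag
```

For example, a single companion seen by both surveys raises only the robo-AO flag, whereas an additional companion seen only by Gaia raises both.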

5.7 Training set coverage

It is crucial to be aware of the content of our training set: planet types or FP scenarios that are not represented will not be well distinguished by the models. The training set here is drawn from the real detected Kepler distribution of planets and FPs, but potential biases exist for situations that are hard to disposition confidently. For example, small planets at low signal-to-noise ratio will typically remain as candidates rather than being confirmed or validated, and certain difficult FP scenarios such as transiting brown dwarfs are unlikely to be routinely recognized. In each case, such objects are likely to be more heavily represented in the unknown, non-dispositioned set.

For planets, coverage of the population as a whole is good, since the training set comprises the entire set of confirmed planets. However, planets in regions of parameter space where Kepler has poor sensitivity should be viewed with suspicion.

For FPs, our training set includes a large number of non-astrophysical TCEs, giving good coverage of that scenario. For astrophysical FPs, we first make our training set as representative as possible by including externally flagged FPs from Santerne et al. (2016), which use additional spectroscopic observations to identify candidates as FPs. However, the bulk of small Kepler candidates orbit stars too faint for spectroscopic follow-up. Using the FP flags in the archive as indicators of the FP scenario, our training set contains 2147 FPs showing evidence of stellar eclipses, and 1779 showing evidence of a centroid offset and hence a background eclipsing source. In addition, 1087 show ephemeris matches, an indicator of a visible secondary eclipse and hence a stellar source. We therefore have wide coverage of the key FP scenarios.
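
Because the archive FP flags are not mutually exclusive, per-scenario counts such as these can overlap. The sketch below tallies them, assuming rows drawn from the NASA Exoplanet Archive KOI table with its `koi_fpflag_ss`, `koi_fpflag_co` and `koi_fpflag_ec` columns (1 when the flag is set); the toy rows used to exercise it are invented for illustration.

```python
from collections import Counter

# Archive KOI false-positive flag columns used as scenario indicators
SCENARIO_FLAGS = {
    "stellar eclipse": "koi_fpflag_ss",
    "centroid offset": "koi_fpflag_co",
    "ephemeris match": "koi_fpflag_ec",
}


def count_fp_scenarios(rows):
    """Count FPs carrying each flag. One FP may carry several flags, so the
    counts need not sum to the number of FPs."""
    counts = Counter()
    for row in rows:
        for scenario, column in SCENARIO_FLAGS.items():
            if row.get(column, 0) == 1:
                counts[scenario] += 1
    return counts


# Invented toy rows: the first FP carries two flags
toy_rows = [
    {"koi_fpflag_ss": 1, "koi_fpflag_ec": 1},
    {"koi_fpflag_co": 1},
    {"koi_fpflag_ss": 0},
]
counts = count_fp_scenarios(toy_rows)
```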

Ideally, the models would be tested scenario by scenario; future work using dedicated simulated data sets will be able to explore this in more detail. Rare and difficult scenarios such as background transiting planets and transiting brown dwarfs are likely to be poorly distinguished by our method, or indeed by any comparable method. For rare cases such as background transiting planets, whose transits are typically too shallow to be detected, the effect on our overall results will be minimal. We note that these issues are equally present for currently used validation methods; vespa, for example, cannot distinguish transiting brown dwarfs from planets (Morton et al. 2016).

6 RESULTS

Our classification results are given in Table 4. The table contains the classifier outputs for each TCE, calibrated where appropriate, together with the relevant priors and the final posterior probabilities obtained by adjusting those outputs with the priors. Several warning flags are included, covering outliers, evolved host stars and detected close companions. Table A1 shows the subset of Table 4 for KOIs, and includes KOI-specific information and vespa probabilities calculated for DR25.

Table 4. Classification results. This table describes the available columns; the full table is available online.

| Column | Description |
|---|---|
| tce_id | Identifier composed by (KIC ID)_(TCE planet number) |
| GPC_score | Score from the GPC before priors are applied |
| MLP_score | Calibrated score from the MLP model before priors are applied |
| RFC_score | Calibrated score from the RFC before priors are applied |
| ET_score | Calibrated score from the ET model before priors are applied |
| PP_GPC | Planet probability from the GPC including priors |
| PP_RFC | Planet probability from the RFC including priors |
| PP_MLP | Planet probability from the MLP model including priors |
| PP_ET | Planet probability from the ET model including priors |
| planet | Normalized prior probability for the planet scenario |
| targetEB | Normalized prior probability for the eclipsing binary on target scenario |
| targetHEB | Normalized prior probability for the hierarchical eclipsing binary scenario |
| targetHTP | Normalized prior probability for the hierarchical transiting planet scenario |
| backgroundBEB | Normalized prior probability for the background eclipsing binary scenario |
| backgroundBTP | Normalized prior probability for the background transiting planet scenario |
| secondsource | Normalized prior probability for any FP scenario on a known other stellar source |
| nonastro | Normalized prior probability for the non-astrophysical/systematic scenario |
| Binary | Berger et al. (2018) binarity flag (0 = no evidence of binarity) |
| State | Berger et al. (2018) evolutionary state flag (0 = main sequence, 1 = subgiant, 2 = red giant) |
| gaia | Flag for new Gaia DR2 sources within 25 arcsec bright enough to cause the signal (Section 5.3) |
| roboAO | Flag for robo-AO detected sources from Ziegler et al. (2018) bright enough to cause the signal |
| MES | Multiple event statistic (MES) for the TCE. Results are valid for MES > 10.5 |
| outlier_score_LOF | Outlier score using local outlier factor on whole data set (Section 5.5) |
| outlier_score_IF | Outlier score using isolation forest focused on training set (Section 5.5) |
| class | Training set class, if any. 0 = confirmed planets, 1 = astrophysical FPs, 2 = non-astrophysical FPs |
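
The warning flags and validity limits described in Sections 5.5 and 5.6 can be applied row by row to Table 4. This sketch is illustrative only: it assumes each row is a dictionary keyed by the column names above, treats a missing flag as unset, and treats any non-zero `State` (subgiant or red giant) as an evolved host; the posterior threshold used for validation is outside its scope.

```python
LOF_THRESHOLD = -1.5   # local outlier factor threshold (Section 5.5)
IF_THRESHOLD = -0.55   # isolation forest threshold (Section 5.5)
MES_MIN = 10.5         # results are only valid for MES above this value

FLAG_COLUMNS = (
    ("gaia", "Gaia companion"),
    ("roboAO", "robo-AO companion"),
    ("Binary", "binarity evidence"),
    ("State", "evolved host"),  # assumption: any non-zero state counts
)


def warning_reasons(row):
    """Return the reasons, if any, to withhold validation for one row."""
    reasons = []
    if row["MES"] <= MES_MIN:
        reasons.append("MES outside valid range")
    if row["outlier_score_LOF"] < LOF_THRESHOLD:
        reasons.append("LOF outlier")
    if row["outlier_score_IF"] < IF_THRESHOLD:
        reasons.append("isolation forest outlier")
    for column, label in FLAG_COLUMNS:
        # None (missing) counts as unset; NaN also fails the > 0 test
        if (row.get(column) or 0) > 0:
            reasons.append(label)
    return reasons


clean = {"MES": 25.0, "outlier_score_LOF": -1.0, "outlier_score_IF": -0.45,
         "gaia": 0, "roboAO": 0, "Binary": 0, "State": 0}
giant = dict(clean, State=2)
weak_outlier = dict(clean, MES=9.0, outlier_score_LOF=-2.0)
```

A row is eligible for validation only when `warning_reasons` returns an empty list; returning the reasons rather than a single boolean keeps the cause of each withheld validation visible.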


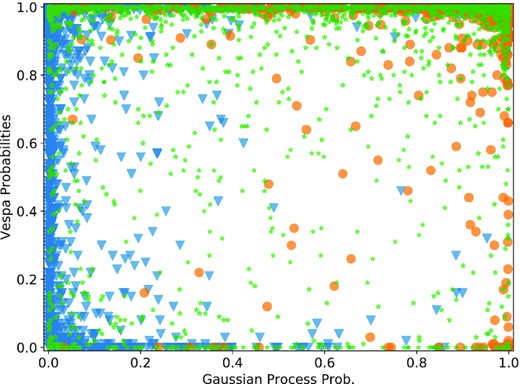

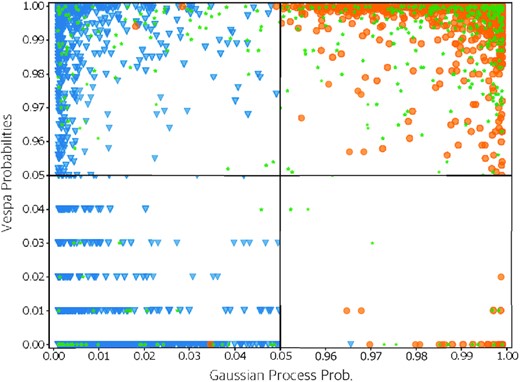
